How to Build a Lightweight AI Image Editor with FastAPI & Nano Banana (Gemini 2.5 Flash Image)

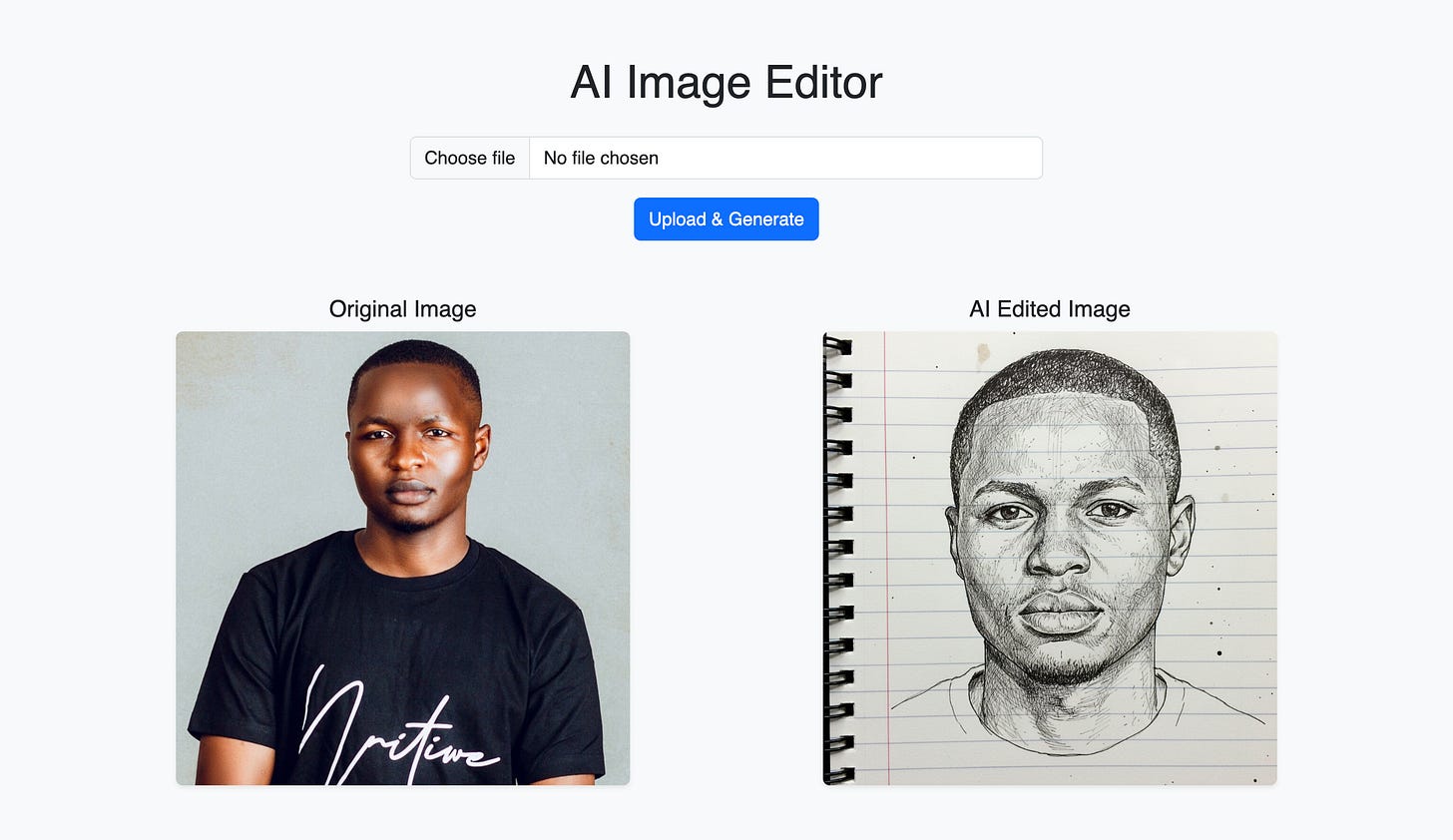

In this hands-on guide, we’ll build a complete web-based image editor that transforms any portrait into a detailed black-and-white ink sketch.

Over the last three years, Generative AI for images has evolved from a fascinating research demo to a tool that powers real-world businesses.

E-commerce stores now edit thousands of product photos in seconds, removing messy backgrounds, swapping seasonal props, or turning flat catalog shots into lifestyle scenes (without hiring a single photographer). Social media managers generate consistent character illustrations across campaigns. Indie game developers create concept art faster than they can sketch it by hand.

What used to take hours in Photoshop now happens in one API call.

Improvements in Image Generation

The biggest hurdles that once plagued image generation, such as distorted hands, inconsistent faces, and the inability to precisely control what stays and what changes, have been largely overcome. Modern multimodal models can now interpret an uploaded reference photo, retain fine facial detail, and apply artistic transformations with high fidelity. This is the new standard for professional image editing.

Enter Nano Banana (Gemini 2.5 Flash Image), Google’s remarkably capable multimodal image model, released in 2025. For users who need fast, reliable, one-shot edits without burning through tokens or GPU credits, Nano Banana is fast becoming the go-to choice.

In this hands-on guide, we’ll build a complete web-based image editor that transforms any portrait into a detailed black-and-white ink sketch, preserving identical facial proportions, expression, and gaze direction, as if an artist had spent twenty minutes on a notebook page.

You’ll deploy it locally in under ten minutes, and I’ll show you how to take it to production for free.

Let’s get started.

What Exactly is Nano Banana?

Nano Banana is the community nickname for Gemini 2.5 Flash Image, a multimodal foundation model accessible via Google’s Gemini API. It’s designed for speed and precision in multiple generative tasks (including but not limited to image-to-image manipulations).

At its core, it is a natively multimodal model that can both read and generate images (Google has not published its parameter count; the “2.5” is the Gemini model generation, not its size). You send it a reference photo plus a natural-language instruction, for example:

“Turn this into a Renaissance oil painting.”

“Remove the sunglasses and add round tortoiseshell glasses.”

“Make this product float in a minimalist white studio with soft shadows.”

It returns a new image that obeys both the visual input and the text.

Key capabilities that make it stand out:

One-shot editing: No fine-tuning, no LoRAs, no ControlNet rigging. Upload → prompt → done.

Photorealistic and artistic fidelity: It can preserve identity across wild style changes.

Object addition/removal/replacement: Insert a coffee mug into a hand, erase a logo, and swap clothing accurately.

Detail modification: Change lighting, weather, time of day, emotional expression, and age appearance.

Scene extension: Expand beyond the original borders (outpainting) with seamless continuation.

You can explore the official model here.

Why is Nano Banana a Great Choice?

Having tested a wide range of generative AI image models, I’ve found that Nano Banana stands out in three key areas, which make it an excellent choice for production and real-world integration.

Identity preservation – When you ask it to “keep the face 100% identical,” it actually does, whereas many other models let proportions drift or soften features.

Fine-line artistic styles – Pencil sketches, ink drawings, and technical illustrations come out crisp. Many models blur thin lines into watercolor mush.

Speed + token efficiency – In my tests, it ran 2–3× faster than comparable models and cost about 60% less at similar resolution.
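Per-image pricing makes it easy to sanity-check cost claims like this against your own volume. The sketch below assumes the launch pricing Google announced for Gemini 2.5 Flash Image: $30 per million output tokens, with each generated image counted as 1,290 output tokens. Treat both defaults as assumptions and check the current Gemini API pricing page; the estimate also ignores the input tokens for your photo and prompt.

```python
def estimate_cost_usd(
    num_images: int,
    tokens_per_image: int = 1290,                 # assumption: output tokens per generated image
    usd_per_million_output_tokens: float = 30.0,  # assumption: launch price, check current pricing
) -> float:
    """Estimate the output-token cost of generating num_images images (input tokens ignored)."""
    if num_images < 0:
        raise ValueError("num_images must be non-negative")
    return num_images * tokens_per_image * usd_per_million_output_tokens / 1_000_000
```

Under those assumptions a single sketch costs about $0.039, and estimate_cost_usd(1000) comes to roughly $38.70.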

Prompt Engineering Basics with Nano Banana

Generative AI models receive their instructions as prompts: task descriptions written in natural language. These multimodal models also have a built-in “creative license” control, and their output varies with the quality of the instructions they receive.

When you give it vague instructions, it cranks that control to 11.

“Make this image a sketch,” → the model thinks: “Ah, freedom! Let’s reinterpret the nose, soften the skin, add anime eyes—fun!”

This means that to get the best out of these Generative AI models, users need to master the art of crafting effective prompts (see the official documentation for a structured introduction to image prompting).

One golden rule for prompting is to be explicit about what must stay the same.

A weak prompt:

“Turn this into a pencil sketch.”

A strong prompt:

“Create a photo-style line drawing/ink sketch of the faces identical to the uploaded reference photo — keep every facial feature, proportion, and expression exactly the same. Use black and white ink tones with intricate, fine line detailing, drawn on a notebook-page style background with faint blue horizontal lines.”

Notice the repetition of “identical” and “exactly the same.” Nano Banana respects that language.

To keep things simple, we’ll use this exact prompt in our image editor tutorial.
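The golden rule can be turned into a small reusable helper: state the transformation, then spell out everything that must not change. This is a minimal sketch; build_edit_prompt and its parameters are our own illustrative names, not part of the Gemini API.

```python
from typing import List


def _join_with_and(items: List[str]) -> str:
    """Join items as natural English: 'a', 'a and b', 'a, b, and c'."""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + ", and " + items[-1]


def build_edit_prompt(transformation: str, keep: List[str], style: str = "") -> str:
    """Compose an edit instruction that states the change and, explicitly, what must not change."""
    if not keep:
        raise ValueError("Name at least one thing the model must preserve.")
    sentences = [
        transformation.strip().rstrip(".") + ".",
        f"Keep every {_join_with_and(keep)} exactly the same as in the uploaded reference photo.",
    ]
    if style:
        sentences.append(style.strip())
    return " ".join(sentences)
```

For example, build_edit_prompt("Turn this into a pencil sketch", ["facial feature", "proportion", "expression"]) upgrades the weak prompt into "Turn this into a pencil sketch. Keep every facial feature, proportion, and expression exactly the same as in the uploaded reference photo."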

What You’ll Need to Build the Editor

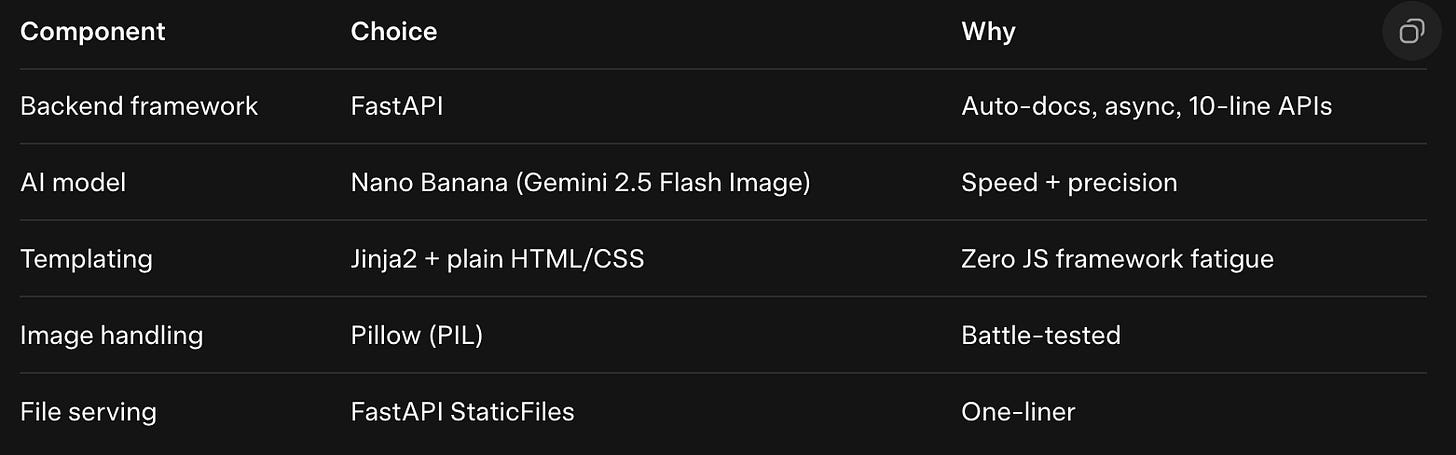

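We’re keeping the stack intentionally minimal: Python with FastAPI and Uvicorn for the server, Jinja2 templates styled with Bootstrap 5 for the page, Pillow for image handling, python-dotenv for the API key, and Google’s google-genai SDK to call Nano Banana.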

Do not worry if you have no experience with “web development & templating”. I’ll also show a one-click Streamlit alternative at the end of this guide.

Why FastAPI?

FastAPI is a modern, fast (high-performance) web framework for building APIs with Python based on standard Python type hints. We chose it for three reasons: it makes file uploads easy, it generates interactive API documentation automatically (served at /docs, which is handy for testing), and its asynchronous support helps serve images efficiently. In this guide, we’ll use these features to build and deploy our web-based AI image editor.

If you're new to FastAPI and want to learn more, I wrote a comprehensive guide on documentation best practices here:

Step-by-Step Guide to Create GenAI Image Editor

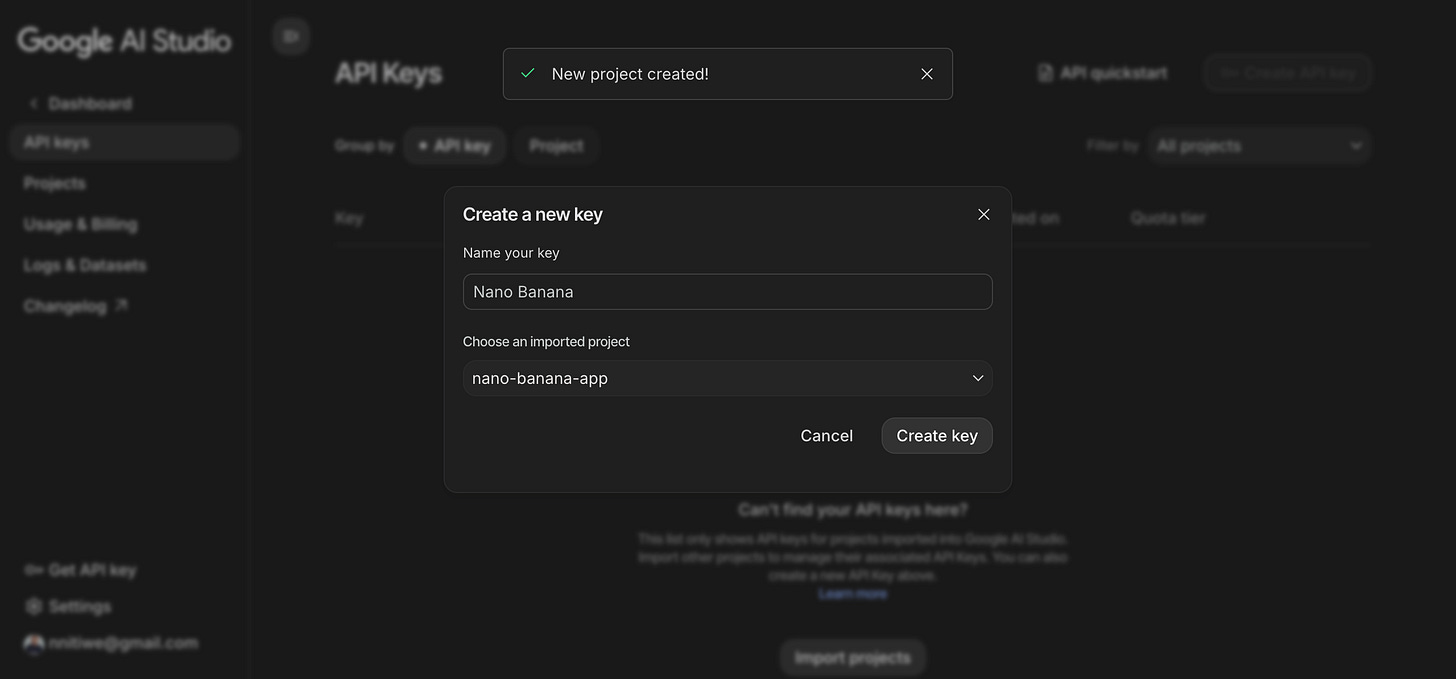

Step 1 – Grab Your Gemini API Key

In Google AI Studio, create a new API key and copy it.

Create a `.env` file in your project root:

```
NANO_BANANA_API_KEY=your_actual_key_here
```
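If the key is missing or still set to the placeholder, the problem may only surface on the first API call. A small startup check makes it obvious immediately. This is a sketch; require_api_key and MissingApiKeyError are hypothetical names, not part of python-dotenv or google-genai.

```python
import os


class MissingApiKeyError(RuntimeError):
    """Raised when the Gemini API key has not been configured."""


PLACEHOLDER = "your_actual_key_here"


def require_api_key(var_name: str = "NANO_BANANA_API_KEY") -> str:
    """Return the key from the environment, or fail fast with an actionable message."""
    key = os.getenv(var_name, "").strip()
    if not key or key == PLACEHOLDER:
        raise MissingApiKeyError(
            f"{var_name} is missing or still the placeholder; set it in .env "
            "(locally) or in your host's environment variables."
        )
    return key
```

Call load_dotenv() first, then pass require_api_key() to genai.Client(api_key=...).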

Step 2 – Install Dependencies

```bash
pip install fastapi uvicorn python-multipart pillow google-genai python-dotenv jinja2
```

Step 3 – Folder Structure

```
codelab_13/
├── main.py
├── ai/
│   └── editor.py
├── routers/
│   └── image_routes.py
├── static/
│   ├── css/
│   │   └── style.css
│   ├── uploads/
│   └── generated/
└── templates/
    └── index.html
```

Create the folders exactly as shown. main.py mounts the static/ folder at /static, so FastAPI serves everything inside it automatically.
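Rather than creating the tree by hand, you can script it with pathlib. This sketch creates the folders and empty placeholder files shown above; scaffold is our own helper name, and it never overwrites the contents of files that already exist.

```python
from pathlib import Path

# The tree above, relative to the project root
DIRECTORIES = ["ai", "routers", "static/css", "static/uploads", "static/generated", "templates"]
FILES = ["main.py", "ai/editor.py", "routers/image_routes.py",
         "static/css/style.css", "templates/index.html"]


def scaffold(root: str = "codelab_13") -> Path:
    """Create the project folders and empty placeholder files; existing file contents are kept."""
    base = Path(root)
    for directory in DIRECTORIES:
        (base / directory).mkdir(parents=True, exist_ok=True)
    for name in FILES:
        (base / name).touch(exist_ok=True)  # creates the file if missing, never truncates it
    return base
```

Running scaffold() from the folder where you keep your projects creates codelab_13/ in one step.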

Step 4 – Implementing the Core AI Feature

Let’s write the function that talks to Nano Banana.

ai/editor.py

```python
import os
from io import BytesIO

from dotenv import load_dotenv
from google import genai
from PIL import Image

load_dotenv()  # loads NANO_BANANA_API_KEY from .env into the environment

client = genai.Client(api_key=os.getenv("NANO_BANANA_API_KEY"))

OUTPUT_FOLDER = "static/generated"
os.makedirs(OUTPUT_FOLDER, exist_ok=True)


def edit_image(image_path: str) -> str:
    """Turn the image at image_path into an ink sketch and return the saved file's path."""
    prompt = """
    Create a photo-style line drawing/ink sketch of the faces identical to the uploaded reference photo —
    keep every facial feature, proportion, and expression exactly the same. Use black and white ink tones
    with intricate, fine line detailing, drawn on a notebook-page style background with faint blue horizontal lines.
    """

    # splitext keeps dotted names intact ("my.photo.jpg" -> "my.photo"), unlike split(".")[0]
    stem = os.path.splitext(os.path.basename(image_path))[0]
    output_name = f"{OUTPUT_FOLDER}/{stem}_sketch.png"

    input_image = Image.open(image_path)

    response = client.models.generate_content(
        model="gemini-2.5-flash-image",
        contents=[input_image, prompt],  # image first, then the instruction
    )

    # The model returns the image bytes in a part's inline_data; other parts may be text
    for part in response.candidates[0].content.parts:
        if part.inline_data:
            img = Image.open(BytesIO(part.inline_data.data))
            img.save(output_name)
            return output_name

    # No image part came back (e.g. a text-only reply), so fail loudly instead of returning a missing path
    raise RuntimeError("Nano Banana returned no image for this request.")
```

Key points:

We pass `[input_image, prompt]` as a list, and order matters: the image goes first, the instruction second.

The response carries the generated image’s bytes inside `inline_data.data`.

We save directly into static/generated so FastAPI can serve it instantly.
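A response is not guaranteed to contain an image: it can include text parts alongside the image, or text only (for instance, if the model declines the request), and that text often explains what happened. The sketch below separates the two. split_response_parts is a hypothetical helper; it only relies on the candidates, content, parts, inline_data, and text attributes used above, so it can be tested with stand-in objects.

```python
from typing import List, Optional, Tuple


def split_response_parts(response) -> Tuple[Optional[bytes], List[str]]:
    """Return (first image's bytes or None, list of text parts) from a generate_content response."""
    if not response.candidates:
        return None, []
    content = response.candidates[0].content
    parts = (content.parts or []) if content is not None else []
    image_bytes: Optional[bytes] = None
    texts: List[str] = []
    for part in parts:
        inline = getattr(part, "inline_data", None)
        if inline is not None and image_bytes is None:
            image_bytes = inline.data  # keep only the first image
        elif getattr(part, "text", None):
            texts.append(part.text)
    return image_bytes, texts
```

If image_bytes comes back as None, raising an error that includes " ".join(texts) surfaces the model's own explanation instead of a bare failure.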

Step 5 – FastAPI Routes – Glue Everything Together

routers/image_routes.py

```python
import os
import shutil

from fastapi import APIRouter, File, Request, UploadFile
from fastapi.concurrency import run_in_threadpool
from fastapi.responses import HTMLResponse
from fastapi.templating import Jinja2Templates

from ai.editor import edit_image

router = APIRouter()
templates = Jinja2Templates(directory="templates")

UPLOAD_FOLDER = "static/uploads"
os.makedirs(UPLOAD_FOLDER, exist_ok=True)


@router.get("/", response_class=HTMLResponse)
async def index(request: Request):
    return templates.TemplateResponse(request, "index.html")


@router.post("/upload", response_class=HTMLResponse)
async def upload_image(request: Request, file: UploadFile = File(...)):
    # Save the uploaded file; basename() strips any directory parts a client sends
    filename = os.path.basename(file.filename or "") or "upload.png"
    file_path = f"{UPLOAD_FOLDER}/{filename}"
    with open(file_path, "wb") as f:
        shutil.copyfileobj(file.file, f)

    # Generate the sketch; edit_image() blocks, so run it off the event loop
    ai_path = await run_in_threadpool(edit_image, file_path)

    return templates.TemplateResponse(request, "index.html", {
        "original_image": f"/{file_path}",
        "ai_image": f"/{ai_path}",
    })
```

main.py

```python
from fastapi import FastAPI
from fastapi.staticfiles import StaticFiles

from routers import image_routes

app = FastAPI()

# Serve static/ (CSS, uploads, generated sketches) under the /static URL prefix
app.mount("/static", StaticFiles(directory="static"), name="static")
app.include_router(image_routes.router)

# Run with: uvicorn main:app --reload
```

Step 6 – The Frontend – Minimal but Responsive

templates/index.html uses Bootstrap 5 + a touch of custom CSS. The magic is in two Jinja2 variables:

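```html
{% if original_image %}
<img src="{{ original_image }}" ...>
<img src="{{ ai_image }}" ...>
{% endif %}
```

When the POST request finishes, we re-render the same page with those variables populated. No JavaScript required. The upload form itself only needs to post to /upload with enctype="multipart/form-data"; without that encoding, the browser won’t send the file.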

The full HTML is available in the GitHub repository below.

Step 7 – Run It Locally

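```bash
uvicorn main:app --reload
```

Run this from the codelab_13/ folder so the relative static/ and templates/ paths resolve, then open http://127.0.0.1:8000.

Upload a selfie → watch Nano Banana return an ink sketch, usually within a few seconds.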
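If you’d rather exercise the endpoint from a script (for example, to try several photos in a row), here is a small stdlib-only client sketch. It builds the same multipart/form-data request a browser form sends, using the field name file to match the route’s file parameter; build_multipart and upload are our own helper names, not part of the repo.

```python
import mimetypes
import urllib.request
import uuid
from pathlib import Path
from typing import Tuple


def build_multipart(field: str, filename: str, data: bytes) -> Tuple[bytes, str]:
    """Encode a single file as a multipart/form-data body; returns (body, Content-Type header)."""
    boundary = uuid.uuid4().hex  # random boundary, vanishingly unlikely to occur in the file bytes
    mime = mimetypes.guess_type(filename)[0] or "application/octet-stream"
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="{field}"; filename="{filename}"\r\n'
        f"Content-Type: {mime}\r\n\r\n"
    ).encode("utf-8")
    tail = f"\r\n--{boundary}--\r\n".encode("utf-8")
    return head + data + tail, f"multipart/form-data; boundary={boundary}"


def upload(path: str, url: str = "http://127.0.0.1:8000/upload") -> str:
    """POST a local image to the running editor and return the HTML page it renders."""
    file_path = Path(path)
    body, content_type = build_multipart("file", file_path.name, file_path.read_bytes())
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": content_type}, method="POST"
    )
    # Image generation can take a while, so allow a generous timeout
    with urllib.request.urlopen(request, timeout=120) as response:
        return response.read().decode("utf-8")
```

With uvicorn running, upload("selfie.jpg") returns the rendered page, and the sketch’s path under /static/generated appears in its HTML.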

Full source code (including style.css):

https://github.com/nnitiwe-dev/youtube-codelabs/tree/main/codelab_13

Want to understand how Jinja2 + FastAPI work under the hood? I explained it step-by-step in part 2 of this series:

Step 8 – Deploying to the Internet (Free)

You now have a web-ready image editor. Push it to Vercel in 5 minutes:

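Create a repo and push the codebase (`git init && git add . && git commit -m "first"`, then push to GitHub). Add .env to your .gitignore first so your API key is never committed, and list the Step 2 packages in a requirements.txt so Vercel can install them.

Connect the repo to Vercel → choose the “FastAPI” template.

Add your `NANO_BANANA_API_KEY` in Project Settings → Environment Variables.

Done.

One caveat: Vercel functions have a read-only filesystem apart from /tmp, so the writes to static/uploads and static/generated will fail there as written; you’ll need to write to /tmp or return the images directly in the response.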
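Because a serverless host may not let you write to static/, one option (a different approach from the repo’s file-based one) is to keep everything in memory: read the upload’s bytes, check them, send them to the model, and embed both images in the page as data URIs. validate_upload and to_data_uri below are hypothetical helpers; the allowed extensions and the 10 MB limit are assumptions, and an extension check is only a first filter, not proof that the file is a real image.

```python
import base64
import os
from typing import Optional

ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}  # assumption: formats you choose to accept
MAX_UPLOAD_BYTES = 10 * 1024 * 1024  # assumption: a 10 MB cap; tune for your host


def validate_upload(filename: Optional[str], data: bytes) -> str:
    """Return the lowercased extension if the upload is acceptable; raise ValueError otherwise."""
    # basename() drops any directory parts a client sneaks into the filename
    ext = os.path.splitext(os.path.basename(filename or ""))[1].lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise ValueError(f"Unsupported file type {ext!r}; allowed: {sorted(ALLOWED_EXTENSIONS)}")
    if len(data) > MAX_UPLOAD_BYTES:
        raise ValueError(f"Upload is {len(data)} bytes; the limit is {MAX_UPLOAD_BYTES}.")
    return ext


def to_data_uri(image_bytes: bytes, mime_type: str = "image/png") -> str:
    """Encode raw image bytes as a data: URI, so <img src> needs no file on disk."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"
```

In the /upload route, that would mean reading data = await file.read(), calling validate_upload(file.filename, data) and turning a ValueError into fastapi.HTTPException(status_code=400), sending the bytes to the model with google-genai’s types.Part.from_bytes(data=data, mime_type=...) instead of a PIL image, and passing to_data_uri(...) values to the template in place of /static paths. Base64 makes each image about a third larger, which is acceptable for single portraits.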

I wrote a zero-downtime deployment guide here:

[Optional] Streamlit Alternative (If HTML Feels Heavy)

Add this file streamlit_app.py:

```python
import streamlit as st

from ai.editor import edit_image

st.title("Nano Banana Sketch Artist")

uploaded = st.file_uploader("Upload portrait", type=["png", "jpg", "jpeg"])

if uploaded:
    # edit_image() expects a file path, so write the upload to disk first
    with open("temp.jpg", "wb") as f:
        f.write(uploaded.getbuffer())

    with st.spinner("Sketching..."):
        result = edit_image("temp.jpg")  # path of the saved sketch

    col1, col2 = st.columns(2)
    col1.image(uploaded, caption="Original")
    col2.image(result, caption="Ink Sketch")
```

Run `streamlit run streamlit_app.py` (after `pip install streamlit`) → instant app, no HTML knowledge needed.

Conclusion

In under 150 lines of code, you’ve built a state-of-the-art image editor that rivals $50/month SaaS tools. That’s the power of modern foundation models combined with Python’s incredible ecosystem.

You now understand:

How Nano Banana achieves one-shot edits with identity preservation

Prompt engineering patterns that actually work

How to wire FastAPI + Jinja2 into a polished web app

Deployment paths that cost literally nothing

This same pattern scales effortlessly: simply add background removal or a clothing swap by changing a single prompt. The heaviest lifting is already done by Google’s servers.
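To make “changing a single prompt” concrete, the fixed sketch prompt can become one entry in a table of presets. This is a sketch: PRESETS and prepare_edit are hypothetical names, and the background-removal and clothing-swap wordings are illustrative, not prompts the author has tested.

```python
import os
from typing import Tuple

# Hypothetical presets: each task is just an output suffix plus an instruction template.
# The wording is illustrative; tune it the same way as the sketch prompt.
PRESETS = {
    "sketch": (
        "sketch",
        "Create a photo-style line drawing/ink sketch of the faces identical to the uploaded "
        "reference photo. Keep every facial feature, proportion, and expression exactly the same.",
    ),
    "remove_background": (
        "nobg",
        "Remove the background and place the subject on a plain white background. "
        "Keep the subject exactly the same.",
    ),
    "clothing_swap": (
        "outfit",
        "Replace the person's clothing with {outfit}. "
        "Keep the face, pose, and lighting exactly the same.",
    ),
}


def prepare_edit(image_path: str, preset: str, output_folder: str = "static/generated",
                 **details: str) -> Tuple[str, str]:
    """Return (prompt, output_path) for a preset; details fill placeholders such as {outfit}."""
    if preset not in PRESETS:
        raise KeyError(f"Unknown preset {preset!r}; choose from {sorted(PRESETS)}")
    suffix, template = PRESETS[preset]
    prompt = template.format(**details)  # raises KeyError if a placeholder is left unfilled
    stem = os.path.splitext(os.path.basename(image_path))[0]
    return prompt, f"{output_folder}/{stem}_{suffix}.png"
```

You would then pass prompt to the same client.models.generate_content call from Step 4 and save the returned image to the output path.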

I’m currently pouring everything I’ve learned into AI Photo Genie—a consumer app that lets anyone fix backgrounds, upscale to 4K, restore old photos, and relight portraits with one click.

Be the first to try it: https://aiphotogenie.nnitiwe.io/

Join the waitlist, and I’ll notify you once the MVP is deployed.

Until next time—keep building, keep shipping.