Building GenAI Apps #8: Model Context Protocol (MCP) Explained – See It in Action

The REST API for AI — a universal way to connect models to powerful tools and data.

TL;DR — Model Context Protocol: The REST API for AI — a universal way to connect models to powerful tools and data. Learn what it is, how the pieces fit, and run a short demo.

In the previous installment of the "Building GenAI" series, I walked through LLM function and tool calling and explained how these features let large language models (LLMs) interact with external systems in an organized way.

If you haven't read that yet, I would recommend you check it out here for foundational context.

This week, I focus on the Model Context Protocol (MCP), a growing standard that's changing how AI applications interact with their environment.

We'll unpack what MCP is, why it matters in the generative AI space, and I will guide you through a hands-on tutorial to see it in action. By the end, even if you're new to AI engineering, you'll understand how MCP bridges the gap between isolated models and real-world utilities, setting the stage for more sophisticated GenAI apps.

Understanding MCP

Large language models are powerful for generating text, answering questions, or writing code, but they have a key limitation: they can’t directly interact with external systems. For example, an LLM can suggest a task for your project management tool like Asana, but it can’t add that task to Asana, update a database, or save a file to Google Drive on its own. It’s like having a brilliant assistant who can give advice but can’t pick up the phone to make a call.

In our last article, we discussed how function calling addresses this by letting developers define custom code tools—think of them as mini-programs—that the model can trigger with structured JSON outputs.

For instance, you might define a tool to check the weather, and the model outputs {"tool": "get_weather", "city": "Lagos"}, which your app then uses to fetch data from a weather API.
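To make the function-calling flow concrete, here is a minimal sketch of the app-side dispatch step. It assumes the model returns the flat JSON shape shown above; `get_weather` is a stand-in that returns canned text rather than calling a real weather API, and the `TOOLS` registry and `dispatch` helper are illustrative names, not part of any library.

```python
import json


def get_weather(city: str) -> str:
    """Stand-in for a real weather API call (hypothetical; returns canned text)."""
    return f"Weather for {city}: (data would come from a weather API)"


# Registry mapping the tool names the model may emit to Python callables.
TOOLS = {"get_weather": get_weather}


def dispatch(model_output: str) -> str:
    """Parse the model's JSON tool call and run the matching function."""
    call = json.loads(model_output)
    name = call.pop("tool")  # the remaining keys are treated as arguments
    if name not in TOOLS:
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call)


print(dispatch('{"tool": "get_weather", "city": "Lagos"}'))
```

Every new integration means another entry in that registry plus the code behind it, which is the maintenance cost discussed next.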

While function calling is a step forward, it’s not perfect. Writing custom tools for every integration—whether it’s connecting to a payment system, a calendar, or a database—takes time and effort. It’s like coding your own data analysis functions instead of using a library like pandas, which already has tested, ready-to-use solutions. You end up managing authentication, handling errors, and ensuring compatibility, which can slow down development and make your app harder to maintain.

This is where the Model Context Protocol (MCP), introduced by Anthropic in late 2024, comes in. MCP is an open standard that simplifies how AI applications communicate with external tools and data sources. Think of it as a universal adapter: instead of building custom connections for every service, MCP provides a standardized way for AI apps to access tools, data, and prompt templates from various systems. It’s designed to be secure, efficient, and flexible, allowing developers to focus on creating features rather than wrestling with integrations.

Why should you care about MCP?

First, it saves time. By using MCP, you can tap into pre-built servers from companies like Google, PayPal, or Cloudflare, which offer tools for tasks like managing files or processing payments.

Second, it’s gaining traction. Major players like OpenAI have integrated MCP into their APIs, and services like Stripe, HubSpot, and Shopify are adopting it, building a growing ecosystem of compatible tools. For beginners, MCP means you can experiment with powerful integrations without deep coding knowledge.

For advanced developers, it offers a scalable way to build apps that work across multiple services. As AI apps increasingly need to handle real-world tasks—like automating research or syncing with business tools—MCP is becoming a go-to solution for making those connections seamless and reliable.

How MCP Works: The Architecture

To understand MCP, let’s break down its structure. At its core, MCP is a client-server system built on JSON-RPC 2.0, a protocol for exchanging structured messages.

It has two layers:

The data layer, which defines what’s exchanged (like tools or data), and

The transport layer, which handles how those exchanges happen (locally via standard input/output or remotely over the internet).

This setup makes MCP versatile, whether you’re running everything on your laptop or connecting to cloud services.
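As a rough illustration of the data layer, the sketch below builds two JSON-RPC 2.0 requests of the kind an MCP client sends: one to list a server's tools and one to call a tool. The method names `tools/list` and `tools/call` come from the MCP specification; the `make_request` helper and the `get_weather` tool with its arguments are assumptions made up for this example.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # JSON-RPC requests carry an id so replies can be matched


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope (illustrative helper, not an SDK call)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it offers.
list_tools = make_request("tools/list")

# Call one tool by name; "get_weather" and its arguments are hypothetical.
call_tool = make_request(
    "tools/call",
    {"name": "get_weather", "arguments": {"city": "Lagos"}},
)

# Over the local stdio transport, each message travels as one line of JSON.
wire = json.dumps(call_tool) + "\n"
print(wire, end="")
```

The transport layer only decides how `wire` gets delivered, so the same message works whether the server runs locally or remotely.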

The main players in MCP are the MCP Host, MCP Client, and MCP Server.

MCP Host

The host is the AI application you’re interacting with, like a chatbot or a coding tool in your IDE. For example, a productivity app might need to check your calendar, query a database, and send an email.

The host doesn’t do these tasks itself; it relies on MCP clients to connect to servers that provide the needed capabilities.

MCP Client

An MCP client is like a messenger. Each client maintains a one-to-one connection with a specific server, handling tasks like setting up the connection and passing requests back and forth.

Clients can also offer their own features, called primitives, to servers.

Sampling lets a server ask the host’s LLM to generate text, keeping the server lightweight and model-agnostic.

Elicitation allows the server to ask the user for input, like confirming an action (“Do you want to delete this file?”).

Logging lets servers send debug messages to the client, helping developers track what’s happening.

These features make interactions dynamic—for example, a server might pause a task to get user approval, ensuring safety and control.
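The three client primitives can be pictured as messages flowing from the server to the client. The sketch below is a toy handler, not the official SDK: the method names follow the MCP specification as I understand it (`sampling/createMessage`, `elicitation/create`, `notifications/message`), while the `ToyClient` class, the `ask_user` callback, and the simplified reply shapes are assumptions for illustration.

```python
from typing import Callable, Optional


class ToyClient:
    """Toy handler for messages a server sends to the client (not the real SDK)."""

    def __init__(self, ask_user: Callable[[str], bool]):
        self.ask_user = ask_user  # how the host asks the human a yes/no question
        self.logs: list = []      # collected server log lines

    def handle(self, message: dict) -> Optional[dict]:
        method = message["method"]
        params = message.get("params", {})
        if method == "sampling/createMessage":
            # A real client would pass params["messages"] to the host's LLM.
            return {"role": "assistant",
                    "content": {"type": "text", "text": "(LLM output goes here)"}}
        if method == "elicitation/create":
            approved = self.ask_user(params["message"])
            return {"action": "accept" if approved else "decline"}
        if method == "notifications/message":
            self.logs.append(f"[{params['level']}] {params['data']}")
            return None  # notifications get no reply
        raise ValueError(f"Unsupported method: {method}")


client = ToyClient(ask_user=lambda question: True)  # auto-approve, for the demo only
print(client.handle({"method": "elicitation/create",
                     "params": {"message": "Do you want to delete this file?"}}))
client.handle({"method": "notifications/message",
               "params": {"level": "info", "data": "file deleted"}})
print(client.logs)
```

In a real host, `ask_user` would open a confirmation dialog, which is what keeps the human in control of risky actions.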

MCP Server

MCP servers, on the other hand, are where the action happens. They can run locally (like a server accessing your computer’s files) or remotely (like one hosted by Google for Drive access).

Servers provide their own primitives:

Tools for actions, like running a database query or fetching a webpage;

Resources for data, like a list of calendar events or a project’s files; and

Prompts for templates that guide the LLM, such as a pre-set format for summarizing data.

For example, a server might offer a tool to search flights, a resource with airport codes, and a prompt to structure travel plans. Servers can also send real-time notifications, like when new tools become available, keeping everything in sync.
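The flight example can be sketched as a toy in-memory server that keeps one registry per primitive. This is a simplified model rather than a real MCP server: the method names (`tools/call`, `resources/read`, `prompts/get`) are from the specification, but the `ToyServer` class, the `airports://codes` URI, and the bare result values are assumptions (real servers wrap results in richer structures).

```python
class ToyServer:
    """Toy model of an MCP server's three primitives (not the real SDK)."""

    def __init__(self):
        self.tools = {}      # name -> callable that performs an action
        self.resources = {}  # URI -> data the client can read
        self.prompts = {}    # name -> template string for the LLM

    def handle(self, request: dict) -> dict:
        method = request["method"]
        params = request.get("params", {})
        if method == "tools/call":
            result = self.tools[params["name"]](**params.get("arguments", {}))
        elif method == "resources/read":
            result = self.resources[params["uri"]]
        elif method == "prompts/get":
            template = self.prompts[params["name"]]
            result = template.format(**params.get("arguments", {}))
        else:
            # -32601 is the standard JSON-RPC "method not found" error code.
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


server = ToyServer()
server.tools["search_flights"] = lambda origin, destination: [
    f"{origin} -> {destination} (sample flight)"
]
server.resources["airports://codes"] = {"LOS": "Lagos", "LHR": "London Heathrow"}
server.prompts["plan_trip"] = "Plan a trip from {origin} to {destination}."

reply = server.handle({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "search_flights",
               "arguments": {"origin": "LOS", "destination": "LHR"}},
})
print(reply["result"])
```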

This structure—hosts coordinating clients, clients connecting to servers, and servers providing tools and data—makes MCP powerful. It’s like a well-organized team: each part has a clear role, and together they enable your AI app to handle complex tasks without custom-built connections for every service.

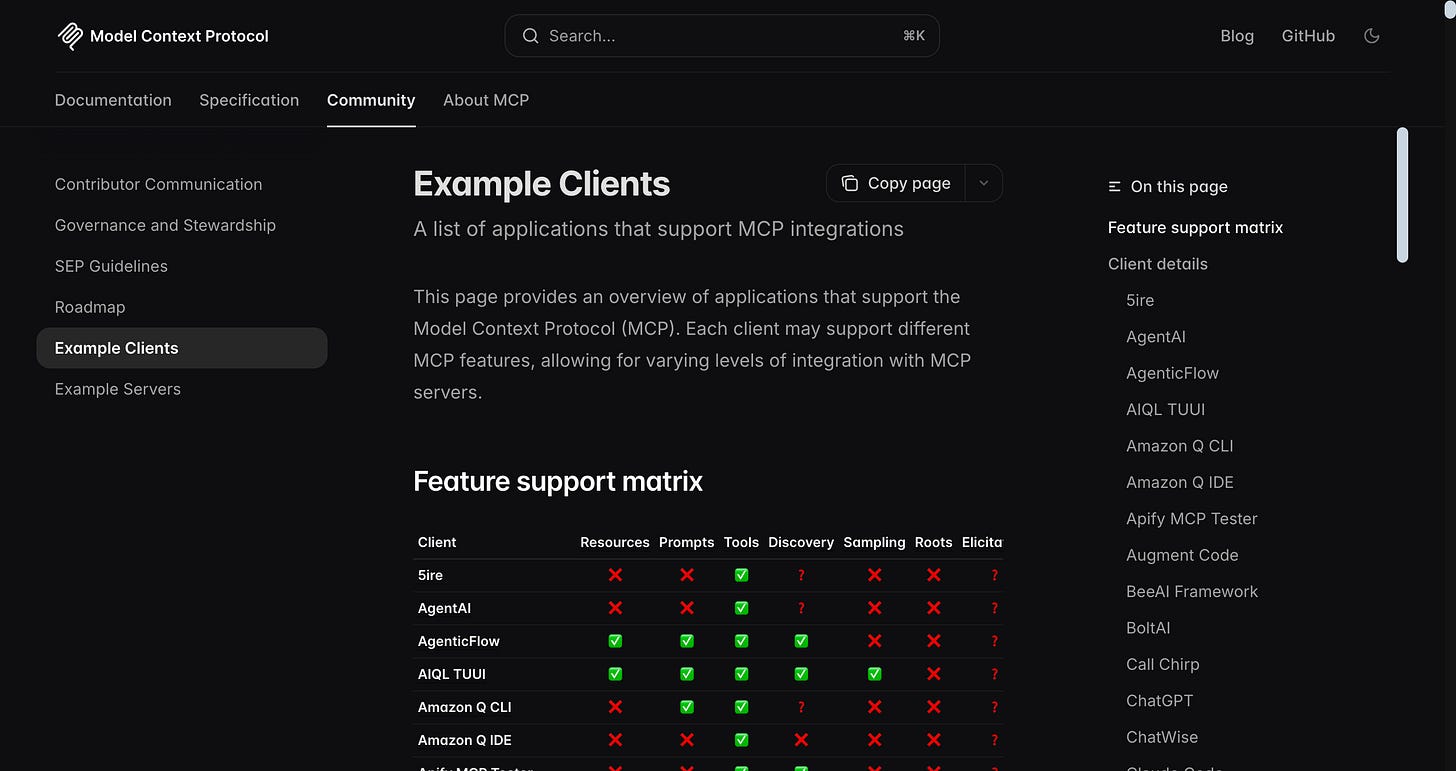

Exploring MCP Clients

MCP clients are the bridge between your AI app and the servers providing tools and data.

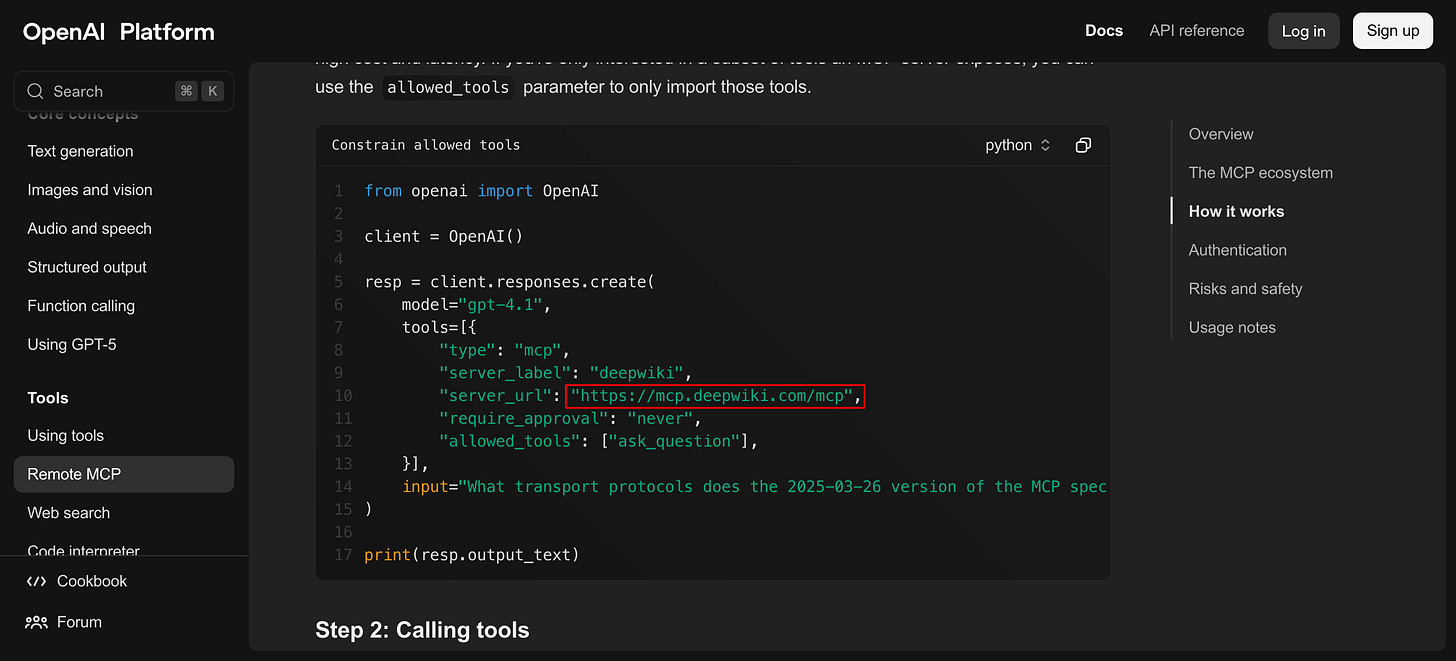

1. OpenAI’s MCP Client

OpenAI has made this easy by embedding MCP support in its Responses API, letting models like GPT-4.1 use remote MCP servers as tools. You simply specify an MCP server’s URL in your API call, and the client handles the rest—discovering available tools, sending requests, and returning results.

There’s no extra cost beyond the tokens used for the API call, making it accessible for web-based projects. For example, you could connect to a server that fetches stock prices, and the model would use its tools to get real-time data without you writing the API logic yourself.

2. Other MCP Clients

The MCP ecosystem extends beyond OpenAI. Tools like Zencoder, a coding assistant for IDEs, act as MCP clients, letting you connect to servers for tasks like Git operations or ticket management in Jira. Other clients, like Anthropic’s Claude Desktop, support similar integration with user-friendly interfaces for non-coders.

These clients let you experiment with MCP without deep technical knowledge. A beginner can, for example, use Claude Desktop to pull data from a server into a chat interface.

3. Building MCP Clients from Scratch

For those wanting to build custom clients, Anthropic’s documentation offers step-by-step guides, covering everything from SDK setup to handling primitives.

We’ll explore building clients in a future article, but for now, know that existing clients make MCP accessible to everyone.

Understanding MCP Servers

MCP servers are the workhorses that provide the tools, data, and templates your app needs.

1. Remote MCP Servers

Remote servers, hosted by companies like Cloudflare or PayPal, are the easiest to use—you just point to their URL.

For example, Stripe’s server might offer tools for processing payments, while Cloudflare’s could handle network requests. These servers are ready-made (hosted and maintained by the respective companies), saving you from building complex integrations.

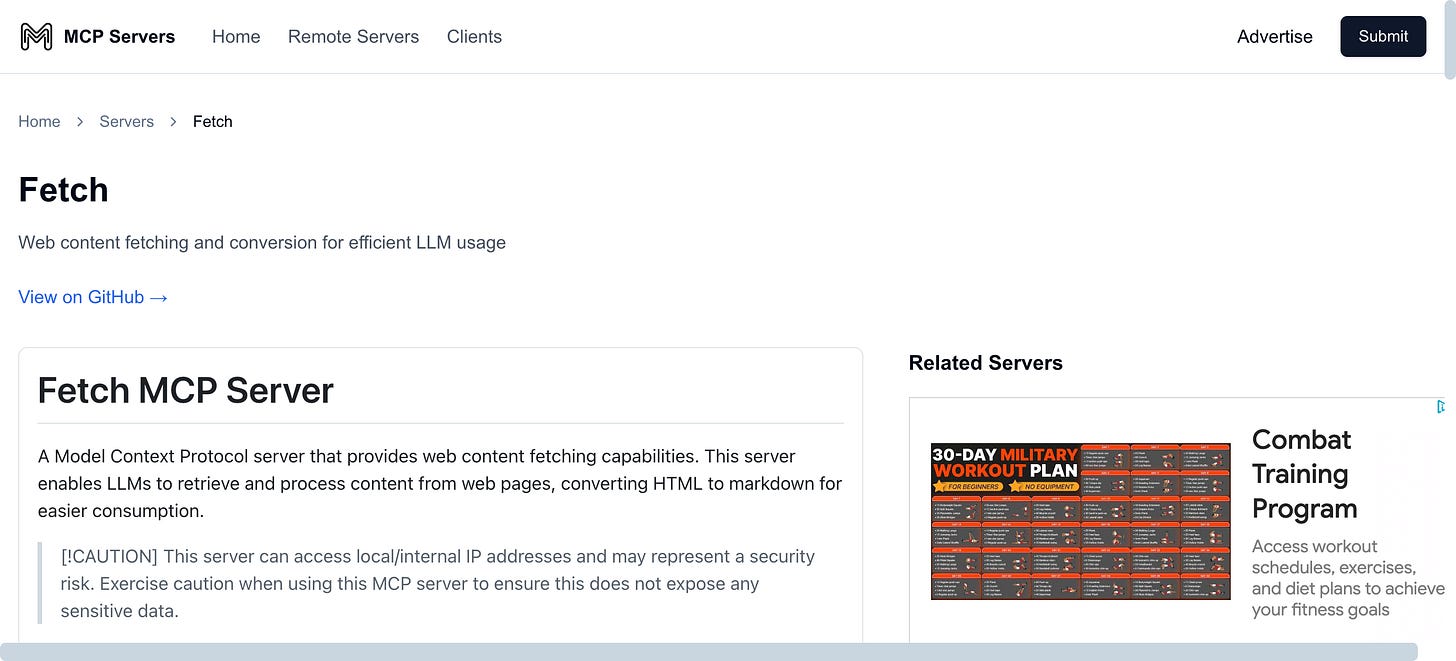

2. Self-Hosted Prebuilt MCP Servers

If you prefer more control, you can self-host prebuilt servers from sources like GitHub or marketplaces like mcpmarket.com. For instance, a filesystem server from GitHub lets you access local files, while a database server might connect to your SQL setup.

These are often simple to deploy, running locally or on self-hosted servers.
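As a hedged illustration of what "simple to deploy" can look like, the sketch below generates the kind of JSON configuration that desktop clients such as Claude Desktop use to launch a local server. The `mcpServers` layout and the `@modelcontextprotocol/server-filesystem` package reflect the reference filesystem server as I know it; the directory path is a placeholder, and your client's documentation is the authority on the exact file name and location.

```python
import json

# Placeholder: replace with a directory you are comfortable exposing to the server.
ALLOWED_DIR = "/path/to/allowed/dir"

config = {
    "mcpServers": {
        "filesystem": {
            # The client starts the server as a subprocess and talks to it over stdio.
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", ALLOWED_DIR],
        }
    }
}

# Print the JSON so it can be pasted into the client's configuration file.
print(json.dumps(config, indent=2))
```

Limiting the server to one directory keeps the blast radius small if the model requests something unexpected.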

3. Building MCP Servers from Scratch

Building your own server is also an option, and Anthropic’s tutorials walk you through defining tools and resources using SDKs in languages like Python or JavaScript.

This will be a topic for a later article, but it’s worth noting that MCP’s standardized approach makes custom servers easier to create than bespoke integrations. Whether you use a remote server or host your own, MCP servers enable your app to access real-world capabilities with minimal effort.

Hands-On: Trying MCP with a Simple Demo

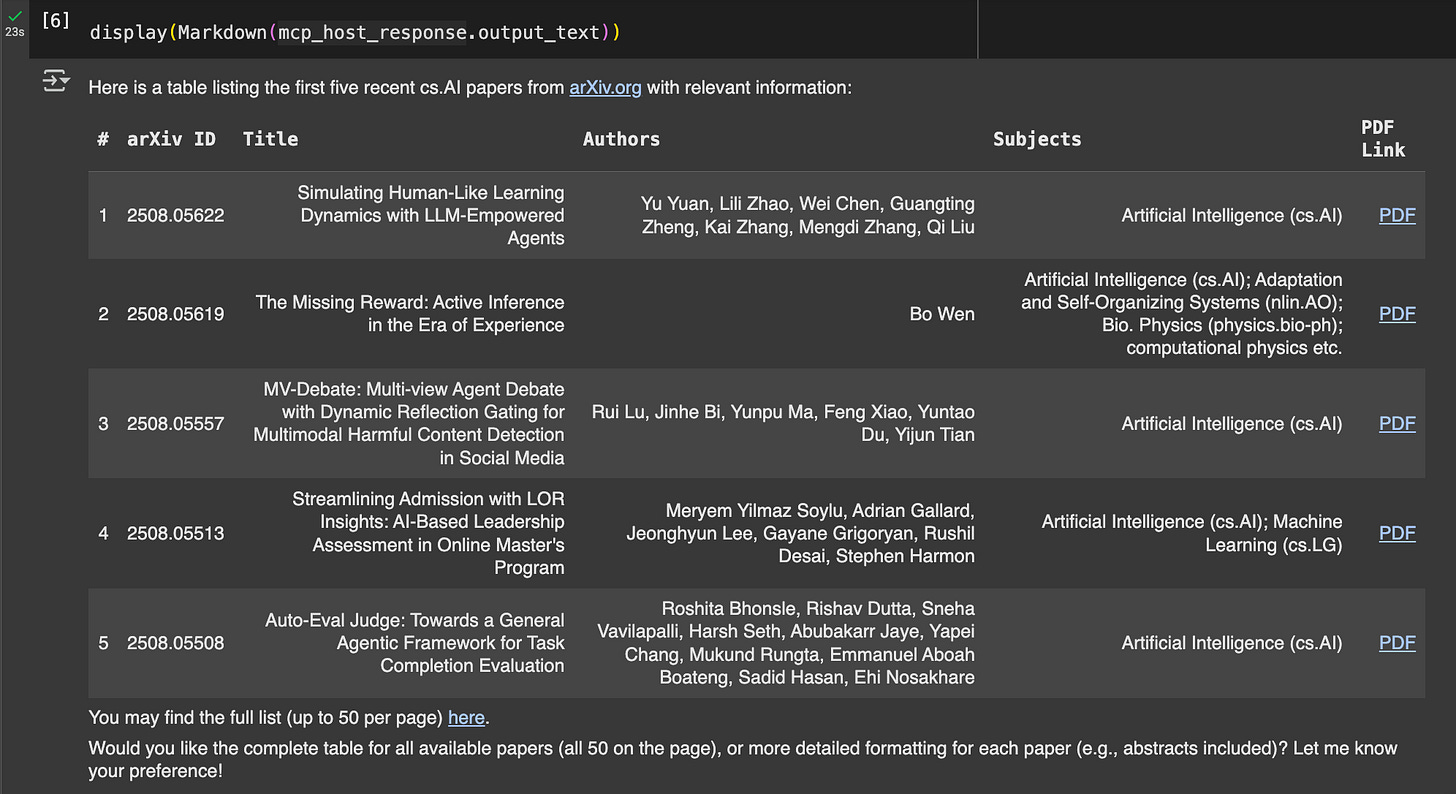

Let’s see MCP in action with a quick example. We’ll use OpenAI’s client to connect to a remote MCP server called “Fetch,” which grabs web content and converts it to markdown for LLMs.

Our task is to fetch recent AI research papers from arxiv.org/list/cs.AI/recent and display them in a markdown table. This demo is beginner-friendly, but advanced users will appreciate how it showcases MCP’s efficiency.

Prerequisites

Ensure you have Python and the OpenAI library installed (pip install openai).

You’ll need an OpenAI API key set as an environment variable (OPENAI_API_KEY).

If you’re using Jupyter, also install ipython for displaying markdown (it comes preinstalled on Colab).

Here’s the code:

```python
from openai import OpenAI
from IPython.display import display, Markdown  # Optional, for notebooks

# OpenAI() reads the API key from the OPENAI_API_KEY environment variable
client = OpenAI()

mcp_host_response = client.responses.create(
    model="gpt-4.1",
    tools=[
        {
            "type": "mcp",
            "server_label": "fetch",
            "server_url": "https://remote.mcpservers.org/fetch/mcp",
            "require_approval": "never",  # run tool calls without pausing for approval
        },
    ],
    input="Fetch all the Papers from this URL https://arxiv.org/list/cs.AI/recent and format into a table structure with all relevant columns",
)

# In a notebook, render the markdown table; in a plain script, use print() instead
display(Markdown(mcp_host_response.output_text))
```

What’s happening here?

The OpenAI client acts as both the MCP host and MCP client, connecting to the Fetch server at https://remote.mcpservers.org/fetch/mcp.

The server offers a “fetch” tool that takes:

url (required), plus optional parameters like max_length (to limit output size) and start_index (to read content in chunks).

When you run the code, the client discovers the tool, the model generates a tool call to fetch the arXiv page, and the server converts the HTML to markdown. The LLM then formats the result into a markdown table.

This demo shows MCP’s strength: you didn’t write any web scraping code or handle HTML parsing—the MCP server did the heavy lifting. Beginners can tweak the input prompt to experiment, while advanced users might explore parameters like start_index for paginated fetching.
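For readers curious about paginated fetching, the sketch below shows the chunking logic that max_length and start_index make possible. It does not call the Fetch server: `fake_fetch` is a local stand-in that slices a string, and `read_in_chunks` is a hypothetical helper that assumes the fetcher returns an empty string once the content is exhausted.

```python
from typing import Callable, Iterator


def read_in_chunks(
    fetch: Callable[[str, int, int], str],
    url: str,
    max_length: int = 5000,
) -> Iterator[str]:
    """Yield successive chunks until the fetcher returns nothing more."""
    start_index = 0
    while True:
        chunk = fetch(url, max_length, start_index)
        if not chunk:
            break
        yield chunk
        start_index += len(chunk)  # resume where the previous chunk ended


# Stand-in for the remote tool: serves slices of a fixed string.
PAGE = "abcdefghijkl"


def fake_fetch(url: str, max_length: int, start_index: int) -> str:
    return PAGE[start_index:start_index + max_length]


chunks = list(read_in_chunks(fake_fetch, "https://example.com", max_length=5))
print(chunks)  # ['abcde', 'fghij', 'kl']
```

In the real demo the model decides when to request the next chunk, but the bookkeeping follows the same pattern.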

Be cautious, though: the Fetch server can access local IPs, so avoid sensitive URLs in production.
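One way to act on that warning, if your app passes user-supplied URLs to a fetch tool, is to screen them first. The sketch below is a minimal guard built on the Python standard library; `is_obviously_internal` is a hypothetical helper, and it only catches literal IP addresses and localhost names. A regular hostname can still resolve to an internal address, so treat this as a first filter rather than complete protection.

```python
import ipaddress
from urllib.parse import urlparse


def is_obviously_internal(url: str) -> bool:
    """Return True if the URL clearly points at a local or private address."""
    host = urlparse(url).hostname
    if host is None:
        return True  # unparseable input is treated as unsafe
    if host == "localhost" or host.endswith(".localhost"):
        return True
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        # A regular hostname: not checked here, DNS could still map it internally.
        return False
    return ip.is_private or ip.is_loopback or ip.is_link_local


for candidate in [
    "https://arxiv.org/list/cs.AI/recent",
    "http://127.0.0.1:8000/admin",
    "http://192.168.1.10/",
]:
    print(candidate, "->", "blocked" if is_obviously_internal(candidate) else "allowed")
```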

Wrapping Up

MCP is a game-changer for building GenAI apps, making it easier to connect models to real-world tools and data. By standardizing communication, it saves time, enhances security, and opens up a world of integrations—from Google Drive to Stripe payments.

This tutorial showed how a single API call can fetch and format web content, a task that would otherwise require custom code. In future articles, we’ll dive into building MCP clients and servers from scratch, but for now, experiment with this demo and share your results!
