Building GenAI Apps #7: Comprehensive Guide to LLM Function and Tool Calling with the OpenAI API

All you need to know about LLM JSON parsing, function calling, and tools (MCP, web search & more).

Welcome to the 7th installment of our "Building GenAI" series!

In this article, we’re diving into some of the most exciting and powerful features of the OpenAI API (structured output, function calling, and tool calling). These capabilities allow you to transform large language models (LLMs) from simple text generators into dynamic tools that can interact with the world—fetching data, performing tasks, and integrating with external systems. I will walk you through everything you need to know to start building your own powerful generative AI applications with these tools.

Say you ask an LLM, “What’s the weather like today?”… How would it answer this question accurately?

Tackling a task like this requires more than just basic knowledge of the weather or geography. To answer correctly, the AI would actually need to get your current latitude + longitude coordinates and fetch real-time weather data.

Or picture another scenario: you want to develop an AI that can scan thousands of research documents and extract key information from each paper into a table (such as the list of authors, abstract, and themes). These are the kinds of complex problems that these features unlock, and by the end of this guide, you’ll have the knowledge to make it happen.

Why AI Apps Need LLM Tool & Function Calling

At their core, LLMs are masters of natural/human language. They are designed to write stories, answer questions, and even chat like a friend. But they’re limited to words.

If you ask an LLM to check the weather, it can’t peek outside or browse the internet on its own—it can only guess based on the data it’s been trained on, which might be outdated or incomplete. This is where tool and function calling come into play, acting like bridges between the AI’s language skills and the real world.

Function calling lets the AI describe a task, like “fetch the weather for New York”, in a structured way that your code (for example, a Python function) can understand and execute, such as by calling a weather API.

Tool calling goes even further, allowing the AI to use external or inbuilt helpers like web search or specialized servers to get information it wouldn’t otherwise have. Together, these features make generative AI (GenAI) apps more than just talkers—they become doers, capable of solving practical problems and delivering real-time, actionable results.

For example, think about building a travel assistant. Without these features, it might only recite generic travel tips. With function and tool calling, it could check flight prices, suggest hotels based on live availability, or even warn you about weather conditions at your destination—all by connecting to external systems. This is why these capabilities are essential for creating GenAI apps that feel truly intelligent and useful.

Prerequisites & Setup

You don’t need to be a coding expert to follow this tutorial, but a few basics will help.

If you’re comfortable with Python basics, great! If not, don’t worry—I’ll explain things step-by-step.

You’ll also need an OpenAI API key, which you can get by signing up at OpenAI.

Finally, a general idea of what JSON is (a way to organize data, like a digital filing system) will be handy, though we’ll cover that too.

For setup, install Python on your computer, and you’re good to go—no fancy equipment required!

Foundations of LLM Execution

Before we jump into the fun stuff, let’s take a quick look under the hood of LLMs.

An LLM is like a super-smart librarian who predicts the next word in a story based on everything it has read before. It doesn’t think in sentences, but in tokens—small chunks of text like words or punctuation (API usage bills are also calculated based on these tokens). For instance, “Hello world” might break into “Hello” and “world”.
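To make the idea of tokens concrete, here is a toy sketch that splits text into words and punctuation marks. It is only an illustration: real LLM tokenizers use learned subword vocabularies, so actual token boundaries and counts will differ. The function name toy_tokenize is made up for this example.

```python
import re


def toy_tokenize(text: str) -> list[str]:
    """Split text into word and punctuation chunks (NOT a real LLM tokenizer)."""
    # \w+ matches runs of letters/digits; [^\w\s] matches one punctuation mark.
    return re.findall(r"\w+|[^\w\s]", text)


tokens = toy_tokenize("Hello world")
print(tokens)       # ['Hello', 'world']
print(len(tokens))  # 2
```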

The prompt is your instruction to the LLM. A good prompt might be, “Explain the weather in simple terms,” which requests a discussion about the weather, while keeping things clear and focused.

But since an LLM is all about words, it can’t do things like check the actual weather—it can only weave a tale from what it knows from historic data. That’s the foundation we’re building on.

Native vs. External Capabilities (Why LLMs Don’t Call Functions Natively)

LLMs are text wizards, not action heroes. They’re built to craft responses, not to perform tasks like dialing up a data analysis service or crunching numbers in a calculator. Let’s break down why they can’t “call functions” on their own.

First, their design is all about language. They identify patterns in text and use those to predict what to say next. Asking them to pick a function, determine its inputs, and execute it is like asking a poet to fix your car—it’s just not what they’re built for.

Second, they lack a means to extend beyond their text bubble. LLMs by design can’t talk directly to APIs (services on the internet), open files, or interact with the world. They might suggest, “Hey, call this weather API with these details,” but they can’t make the call themselves—your code has to do that.

They excel at explaining or imagining, but struggle with doing. That’s why we pair them with external tools and code—to handle the “doing” part while they handle the “thinking,” making a perfect team-up!

OpenAI’s Structured Output, Function-Calling & Tool APIs

Now, let’s get to the good stuff. We’ll explore three key features: structured output, function calling, and tool calling.

OpenAI’s Structured Output: openai.responses.parse for Consistent JSON Outputs

When building LLM apps, sometimes a simple chatty reply isn’t enough—you need data in a specific format, like a list or table, that your program can easily use. That’s where structured output comes in. It forces the LLM to give you answers in a consistent shape, like JSON.

How to Define Structured Outputs

You tell the LLM what shape you want using a schema—a blueprint for the output. There are two main ways to do this:

Pydantic model: You define the structure as a Python class using the Pydantic library, and its type annotations are enforced on the parsed result.

JSON Schema definition: You describe the structure directly using the https://json-schema.org/ standard, which acts like a detailed instruction manual for the JSON output.

Both routes use structured output, which ensures both valid JSON and the exact layout you requested. This differs from the older JSON mode, which only guarantees that the reply is valid JSON, not that it matches any particular schema.
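The difference between “valid JSON” and “valid JSON in the layout you asked for” can be shown with a small standard-library sketch. The helper matches_layout and both sample strings are hypothetical, and the check is deliberately simplistic (top-level fields and types only); in practice the API or Pydantic does this enforcement for you.

```python
import json

# Expected layout: field name -> Python type (a simplified stand-in for a schema).
EXPECTED_LAYOUT = {"title": str, "authors": list}


def matches_layout(raw: str, layout: dict) -> bool:
    """Return True only if raw is valid JSON AND has exactly the expected fields and types."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False  # not even valid JSON
    if not isinstance(data, dict) or set(data) != set(layout):
        return False  # missing or unexpected fields
    return all(isinstance(data[key], expected) for key, expected in layout.items())


valid_but_wrong_shape = '{"name": "A paper", "writers": "Jane Doe"}'
valid_and_right_shape = '{"title": "A paper", "authors": ["Jane Doe"]}'

print(matches_layout(valid_but_wrong_shape, EXPECTED_LAYOUT))  # False
print(matches_layout(valid_and_right_shape, EXPECTED_LAYOUT))  # True
```

Both strings parse as JSON, but only the second has the fields the program expects, which is the gap structured output is meant to close.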

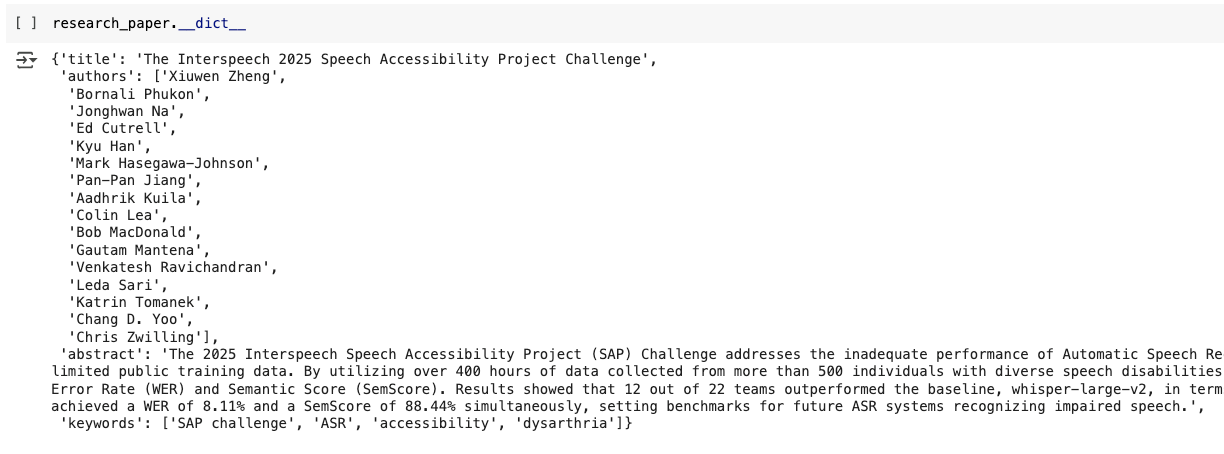

Example: Organizing Research Paper Info with Pydantic

You have been assigned to create a table summarizing the top 4,000 research articles from 2025. The table should include columns for paper title, authors, abstract, and keywords, all neatly organized. Here’s how you can do it in Python.

First, you install all required Python libraries:

```bash
pip install PyMuPDF openai pydantic
```

Extract text from a PDF (say, the arXiv paper “The Interspeech 2025 Speech Accessibility Project Challenge”):

```python
import fitz  # PyMuPDF


def extract_text_from_pdf(pdf_path):
    """Extracts text from a PDF file; returns None on failure."""
    text = ""
    try:
        with fitz.open(pdf_path) as doc:
            for page in doc:
                text += page.get_text()
    except Exception as e:
        print(f"Error extracting text from PDF: {e}")
        text = None  # Indicate failure
    return text


pdf_text = extract_text_from_pdf('/content/2507.22047v1.pdf')

if pdf_text:
    print("Successfully extracted text from PDF.")
else:
    print("Failed to extract text from PDF.")
```

Define the structure of output using Pydantic:

```python
from openai import OpenAI
from pydantic import BaseModel

client = OpenAI(api_key="your-api-key")  # Replace with your key


class ResearchPaperExtraction(BaseModel):
    title: str
    authors: list[str]
    abstract: str
    keywords: list[str]
```

Then, extract content using OpenAI’s API:

```python
response = client.responses.parse(
    model="gpt-4o-2024-08-06",
    input=[
        {
            "role": "system",
            "content": "You’re an expert at organizing data. Take this research paper text and fit it into the structure I give you."
        },
        # pdf_text is the text extracted from the PDF in the earlier step
        {"role": "user", "content": f"Here’s the paper: {pdf_text}"}
    ],
    text_format=ResearchPaperExtraction
)

# output_parsed is an instance of ResearchPaperExtraction
research_paper = response.output_parsed
print(research_paper.__dict__)
```

The AI reads the paper and hands back a ResearchPaperExtraction object with the title, authors, abstract, and keywords fields filled in.

Example: Structured Output with JSON Schema

Here’s how to get a step-by-step math solution using a JSON Schema definition:

```python
response = client.responses.create(
    model="gpt-4o-2024-08-06",
    input=[
        {"role": "system", "content": "You’re a math tutor. Solve this step-by-step."},
        {"role": "user", "content": "How do I solve 8x + 7 = -23?"}
    ],
    text={
        "format": {
            "type": "json_schema",
            "name": "math_response",
            "schema": {
                "type": "object",
                "properties": {
                    "steps": {
                        "type": "array",
                        "items": {
                            "type": "object",
                            "properties": {
                                "explanation": {"type": "string"},
                                "output": {"type": "string"}
                            },
                            "required": ["explanation", "output"],
                            # Strict mode requires this on every object
                            "additionalProperties": False
                        }
                    },
                    "final_answer": {"type": "string"}
                },
                "required": ["steps", "final_answer"],
                "additionalProperties": False
            },
            "strict": True
        }
    }
)

# output_text holds the JSON string that follows the schema
print(response.output_text)
```

Best Practices

When working with structured outputs, remember a few key points. Mistakes can occur, so adjust your instructions or include examples if the output isn't correct. And if you’re coding, tools like Pydantic are better choices for enforcing consistent structured outputs, ensuring your schema and code stay aligned.
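One way to “include examples” is to place a worked input/output pair ahead of the real question. The sketch below only builds the message list; build_messages and the sample pair are hypothetical, and whether an example like this helps depends on your task.

```python
import json


def build_messages(system_prompt, examples, question):
    """Build a message list with few-shot (input, expected output) pairs before the real question."""
    messages = [{"role": "system", "content": system_prompt}]
    for example_input, example_output in examples:
        messages.append({"role": "user", "content": example_input})
        # Show the model the exact JSON shape we expect back.
        messages.append({"role": "assistant", "content": json.dumps(example_output)})
    messages.append({"role": "user", "content": question})
    return messages


examples = [(
    "How do I solve 2x = 6?",
    {"steps": [{"explanation": "Divide both sides by 2.", "output": "x = 3"}],
     "final_answer": "x = 3"},
)]

messages = build_messages(
    "You're a math tutor. Solve this step-by-step.",
    examples,
    "How do I solve 8x + 7 = -23?",
)
print(len(messages))  # 4
```

The resulting list can be passed as the input argument in the calls shown earlier.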

Function Calling: LLM-to-Code, OpenAPI-Style Definitions

Function calling enables the AI to suggest actions like “get the weather” or “perform X analysis and generate a graph” by emitting a structured request that your code maps to a Python function and executes. It’s like the AI saying, “I can’t do this, but here’s exactly what I’d do if I could.”

How to Define Functions

You define a function with a schema that includes:

A function name (e.g., get_weather),

A description (what it does), and

Parameters (what it needs, like latitude and longitude).

Here’s an example for fetching weather:

import requests

import json

def get_weather(latitude, longitude):

response = requests.get(f"https://api.open-meteo.com/v1/forecast?latitude={latitude}&longitude={longitude}¤t=temperature_2m")

return response.json()["current"]["temperature_2m"]

function_definition = [{

"type": "function",

"name": "get_weather",

"description": "Get the current temperature in Celsius for given coordinates.",

"parameters": {

"type": "object",

"properties": {

"latitude": {"type": "number"},

"longitude": {"type": "number"}

},

"required": ["latitude", "longitude"]

},

"strict": True

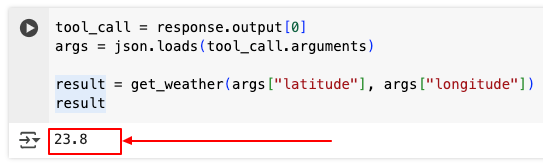

}]Now have the AI process the task:

```python
response = client.responses.create(
    model="gpt-4.1",
    input=[{"role": "user", "content": "What’s the weather in Abuja today?"}],
    tools=function_definition
)

# Pick the function call out of the response (other item types may also be present)
tool_call = next(item for item in response.output if item.type == "function_call")
args = json.loads(tool_call.arguments)

# Execute the function
result = get_weather(args["latitude"], args["longitude"])
print(f"Temperature: {result}°C")
```

The AI figures out Abuja’s coordinates (e.g., latitude 9.0765, longitude 7.3986) and gives your code the green light to fetch the temperature. You run the function, and voilà: real-time weather!
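The snippet above stops once your function has run. To let the model phrase the final answer, the result is usually sent back in a follow-up request. Below is a minimal sketch of that step, under the assumption that function-call items in response.output expose type, name, arguments, and call_id, and that results are returned as function_call_output items. The helper run_tool_calls, the registry dict, and the fake item used for the demo are hypothetical; the stub returns a fixed number instead of calling a weather API.

```python
import json
from types import SimpleNamespace


def run_tool_calls(output_items, registry):
    """Execute every function call the model requested and build the result items to send back."""
    results = []
    for item in output_items:
        if getattr(item, "type", None) != "function_call":
            continue  # skip text messages and other item types
        func = registry[item.name]         # look up the local Python function
        args = json.loads(item.arguments)  # arguments arrive as a JSON string
        results.append({
            "type": "function_call_output",
            "call_id": item.call_id,       # ties the result to the model's request
            "output": str(func(**args)),
        })
    return results


# Demo with a fake item standing in for response.output (no API call is made).
fake_output = [SimpleNamespace(
    type="function_call",
    name="get_weather",
    arguments='{"latitude": 9.0765, "longitude": 7.3986}',
    call_id="call_123",
)]
registry = {"get_weather": lambda latitude, longitude: 29.5}  # stub, not real data

print(run_tool_calls(fake_output, registry))
# [{'type': 'function_call_output', 'call_id': 'call_123', 'output': '29.5'}]
```

In a real app you would append the model’s function-call items and these result items to the original input list, then call client.responses.create again with the same tools so the model can write its reply.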

Best Practices

Make your function names and descriptions crystal clear—like “get_weather” instead of “weather_stuff”—so the AI knows when to use them. Add helpful system prompts like “Use get_weather for weather questions” to guide it.

If it messes up, throw in examples to steer its responses (e.g., “For ‘weather in London,’ use get_weather with lat 51.5074, lon -0.1278”).

Keep functions simple and few—under 20 is a good rule—to avoid confusion.

Tool Calling: MCP, Web Search, etc.

OpenAI’s tool calling feature gives the AI a box of powerful tools for advanced task execution. Beyond mapping requests to your custom code functions, it can use built-in tools such as web search, or connect to remote servers, to retrieve information it lacks.

Exploring Tools

OpenAI offers several tools:

Web Search: Fetches fresh info from the internet.

Remote MCP Servers: Connects to specialized servers for extra capabilities via the Model Context Protocol (MCP).

Code Interpreter: Allows the model to execute code in a secure container.

Computer use: Creates agentic workflows that enable an AI model to control a computer interface.

File search: Searches the contents of uploaded files for context when generating a response.

Image generation: Generates and edits images using GPT Image.

In future articles, we will explore in depth how to best use these advanced tools.

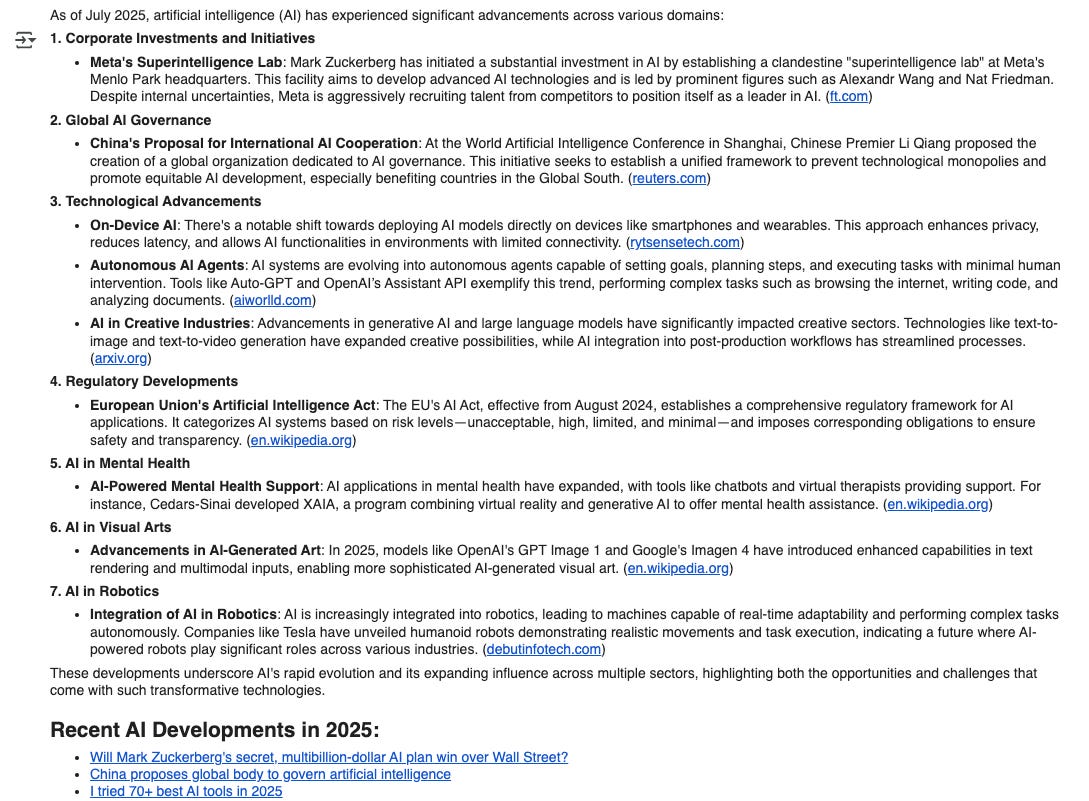

Example: Web Search

Ask, “What’s new in AI in 2025?”:

```python
response = client.responses.create(
    model="gpt-4.1",
    tools=[{"type": "web_search"}],
    input="What are the latest developments in AI as of 2025?"
)

print(response.output_text)
```

The AI searches the web and weaves the latest news into its answer, which is way better than guessing from old data!
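Web search answers typically carry source citations alongside the text. The sketch below collects them, assuming the response output contains message items whose content parts have an annotations list with url_citation entries (each with a title and url). Treat that shape as an assumption to verify against the current API reference; collect_citations and the fake response are hypothetical.

```python
from types import SimpleNamespace as NS


def collect_citations(output_items):
    """Return (title, url) pairs from url_citation annotations, in order, without duplicates."""
    seen, citations = set(), []
    for item in output_items:
        if getattr(item, "type", None) != "message":
            continue  # e.g. the search call itself
        for part in item.content:
            for note in getattr(part, "annotations", None) or []:
                if note.type == "url_citation" and note.url not in seen:
                    seen.add(note.url)
                    citations.append((note.title, note.url))
    return citations


# Fake items standing in for response.output (no API call is made).
fake_output = [
    NS(type="web_search_call"),
    NS(type="message", content=[NS(
        type="output_text",
        text="Example answer.",
        annotations=[
            NS(type="url_citation", title="Example Source", url="https://example.com/a"),
            NS(type="url_citation", title="Example Source", url="https://example.com/a"),
        ],
    )]),
]

print(collect_citations(fake_output))  # [('Example Source', 'https://example.com/a')]
```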

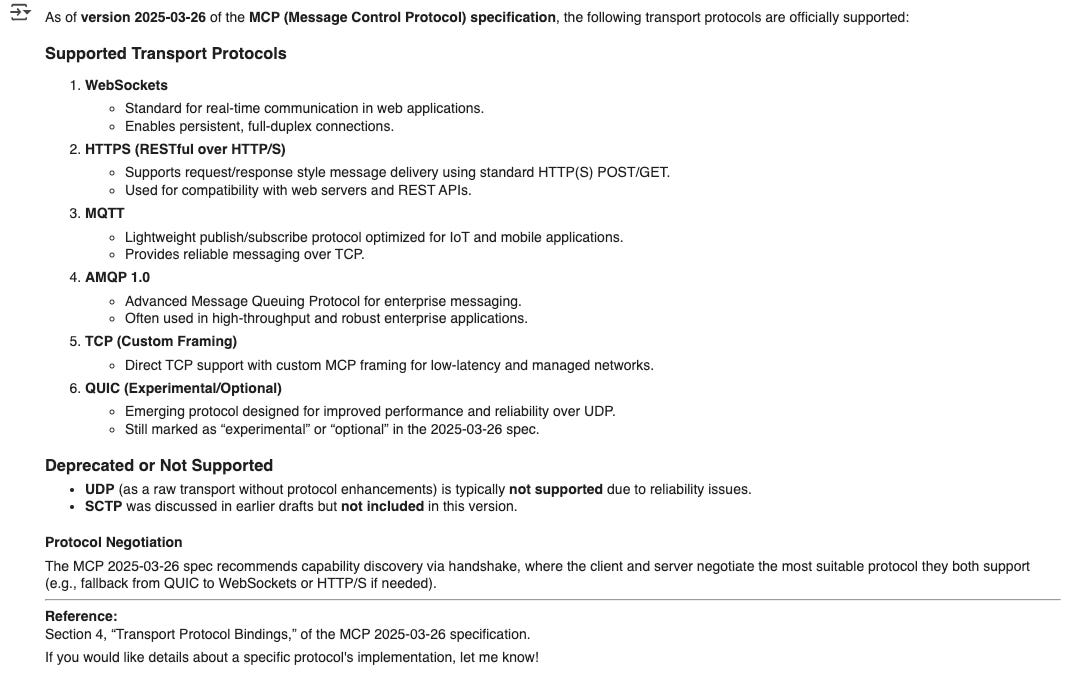

Example: MCP Tool

For something niche, like “What transport protocols are supported in the 2025-03-26 version of the MCP spec?”:

```python
response = client.responses.create(
    model="gpt-4.1",
    tools=[{
        "type": "mcp",
        "server_label": "deepwiki",
        "server_url": "https://mcp.deepwiki.com/mcp",
        "require_approval": "never"
    }],
    input="What transport protocols are supported in the 2025-03-26 version of the MCP spec?"
)

print(response.output_text)
```

The AI queries the MCP server and returns a detailed answer, extending its knowledge beyond its training.

Conclusion

You’ve just unlocked the secrets of OpenAI’s structured output, function calling, and tool calling! These features allow you to build GenAI apps that don’t just talk—they act, connect, and solve real problems. Whether you’re organizing data, fetching weather updates, performing analysis, or tapping into live information, you’re now ready to make it happen.

Want more? Check out the OpenAI Cookbook for more practical and advanced examples, the API docs, or explore fine-tuning for function calling to improve accuracy. The Prompt Engineering Guide is also invaluable for creating better instructions.

In future articles, we’ll explore MCP and other tools in more depth, showing you how to elevate your apps even further. Stay tuned and keep experimenting—your next big AI idea is waiting!