Building GenAI Apps #6: Evaluating LLM Applications with DeepEval (Metrics, Methodologies, Best Practices)

LLM-as-a-Judge: A Guide to Effective LLM Evaluation with DeepEval

Say you’re building a customer support chatbot for your online shoe store. Its job is to handle customer inquiries, resolve issues like returns or sizing questions, and keep your customers happy—all powered by an advanced LLM like OpenAI’s GPT, Google’s Gemini, or xAI’s Grok. You’ve poured time and resources into it, and it’s ready to launch. Will you just deploy it blindly, trusting it’ll work perfectly because it’s built on a cutting-edge model? That’s a risky move.

Even the best LLMs can stumble in ways that could harm your business. Without proper testing, your chatbot might:

Generate biased responses: Imagine a customer asks, “What regions do you deliver burgers to?” and the chatbot replies with something like, “Burgers are not for fat-sized people like you.” That’s not just embarrassing—it’s a PR disaster.

Hallucinate or be inaccurate: A customer might ask, “How long is your return window?” and the chatbot invents, “You have 90 days,” when your business policy is actually 30 days. Misinformation like this erodes trust.

Be irrelevant or weak: If a customer asks, “What if my shoes don’t fit?” and the chatbot responds, “Shoes are great for walking,” it’s technically true but useless, frustrating your users.

These aren’t hypothetical risks; they’re real challenges that LLMs face because of their complexity. Testing and evaluating your LLM apps before deployment helps you catch these edge cases and common pitfalls, so your apps deliver accurate, relevant, and unbiased responses.

Think of evaluation as the quality control checkpoint—it’s your chance to polish your app so it shines in front of your customers.

Understanding LLM Evaluation: Two Different Worlds

Before we dive deeper, let’s clarify what “evaluation” means in the context of LLMs. There are two kinds of evaluation, and knowing the difference is essential:

Foundational Model Evaluation: This is about testing the raw capabilities of base LLMs—like GPT, Gemini, or Grok—before they’re released to the public for users to interact with. Researchers from these companies (OpenAI, Google, etc.) use benchmark datasets such as:

MMLU (Massive Multitask Language Understanding): Tests general knowledge across subjects like math and history.

BIG-Bench Hard: Challenges models with tough reasoning tasks.

TruthfulQA: Checks if the model avoids lying or making up facts.

HumanEval: Assesses code generation skills.

These benchmarks give a broad sense of a model’s strengths, like a report card for its language skills. But they don’t tell you how it’ll perform in your specific app’s use case or domain.
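To see why a benchmark score says little about your own app, it helps to look at what one actually computes. Many of these benchmarks (MMLU, for instance) are multiple-choice, and scoring them comes down to exact-match accuracy over a fixed question set. The sketch below uses made-up questions and predictions, not real benchmark data:

```python
# Toy multiple-choice items in the style of MMLU (invented for illustration).
items = [
    {"question": "What is 7 * 8?", "choices": ["54", "56", "58", "64"], "answer": "B"},
    {"question": "Which planet is closest to the Sun?",
     "choices": ["Venus", "Earth", "Mercury", "Mars"], "answer": "C"},
    {"question": "What is the chemical symbol for gold?",
     "choices": ["Ag", "Au", "Gd", "Go"], "answer": "B"},
]

# Hypothetical letters a model picked for each item.
model_predictions = ["B", "C", "A"]


def benchmark_accuracy(items, predictions):
    """Fraction of items where the predicted letter matches the gold letter."""
    correct = sum(pred == item["answer"] for item, pred in zip(items, predictions))
    return correct / len(items)


print(f"accuracy = {benchmark_accuracy(items, model_predictions):.2f}")  # accuracy = 0.67
```

A 0.67 here means the model got two of three trivia questions right; it says nothing about whether it will quote your 30-day return policy correctly.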

LLM App Performance Evaluation: This is our focus today. It’s about testing the entire application you’ve built on top of an LLM (your customer support chatbot, for instance) to see if it meets your goals. It’s less about the foundational model’s general capabilities and more about how well your app handles real user interactions.

Why does this matter? Your chatbot might use a top-tier model, but if it’s not fine-tuned or configured right for your app’s needs, it could still fail. Custom evaluation ensures your app delivers what your customers expect, not just what the base model can do in a lab.

The Challenge of Evaluating LLMs: Why It’s Trickier Than Traditional Testing

Testing isn’t new—software developers have been doing it forever. For traditional software, tools like Pytest let you write:

Unit tests: Checking individual pieces (e.g., “Does this button work?”).

Integration tests: Ensuring all pieces work together (e.g., “Does clicking the button update the cart?”).

These tests are straightforward because software is deterministic—the same input always gives the same output. If you test a calculator app and type “2 + 2,” you expect “4” every time.
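For comparison, here is what a deterministic unit test looks like in pytest style. The add function is a hypothetical stand-in for your calculator logic; because it always returns the same value, a single exact-match assertion settles the question:

```python
def add(a: int, b: int) -> int:
    """A deterministic function: the same inputs always produce the same output."""
    return a + b


def test_add():
    # Exact-match assertions work because the output never varies between runs.
    assert add(2, 2) == 4


# pytest discovers and runs functions named test_*; calling it directly also works.
test_add()
```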

In the early days of AI, machine learning models were tested similarly with metrics like:

Accuracy: How often is the model right?

F1 Score: A balance of precision and recall for classification tasks.

Mean Squared Error: How close are predictions to reality in regression?
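All three metrics are simple arithmetic over numeric or categorical outputs, which is exactly what makes them a poor fit for free-form text. A plain-Python sketch with toy labels (1 = "return request", 0 = "other") and toy delivery-day predictions, both invented for illustration:

```python
def accuracy(y_true, y_pred):
    """Share of predictions that exactly match the labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def mean_squared_error(y_true, y_pred):
    """Average squared gap between predictions and real values."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy classification: is the customer message a return request?
labels = [1, 0, 1, 1, 0]
preds = [1, 0, 0, 1, 1]
print(accuracy(labels, preds))            # 0.6
print(round(f1_score(labels, preds), 3))  # 0.667

# Toy regression: actual vs. predicted delivery days.
print(mean_squared_error([3.0, 5.0, 2.0, 4.0], [2.0, 5.0, 3.0, 4.0]))  # 0.5
```

In real projects you would reach for tested implementations such as those in scikit-learn; the point here is only that each metric needs a single right answer to compare against.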

But LLMs throw a curveball. They’re non-deterministic—the same question might get slightly different answers each time. Ask your chatbot, “What’s your return policy?” and it might say, “30 days, no extra cost,” one time and “You’ve got a month to return it” the next.

Both might be correct, but how do you test that consistently? Plus, LLM outputs are free-form text, not numbers or yes/no answers, so metrics like Accuracy don’t fully capture qualities like relevance, coherence, or tone.
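Here is the problem in code. The answers below are hypothetical chatbot replies to the return-policy question. Exact string matching rejects two correct paraphrases, while a hand-written keyword rule accepts all of them, including a fourth reply that says the opposite of the policy:

```python
import re

expected = "30 days, no extra cost"

# Hypothetical chatbot replies: the first three are correct, the last one is wrong.
answers = [
    "30 days, no extra cost",
    "You've got a month to return it.",
    "You have 30 days to get a full refund at no extra cost.",
    "Returns are not possible within 30 days.",
]


def exact_match(answer: str) -> bool:
    """Traditional test: pass only on an identical string."""
    return answer == expected


def mentions_30_day_window(answer: str) -> bool:
    """Crude keyword rule: accept '30 day(s)', '30-day', or 'a month'."""
    return bool(re.search(r"\b30[- ]days?\b|\ba month\b", answer, re.IGNORECASE))


for a in answers:
    print(f"exact={exact_match(a)!s:<5} keyword={mentions_30_day_window(a)!s:<5} {a}")
```

Neither check understands meaning, which is the gap an LLM-based judge is meant to fill.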

This non-deterministic nature, combined with complex AI pipelines (e.g., retrieving data before generating a response), makes traditional testing tools insufficient. We need something designed specifically for LLMs—something robust like DeepEval.

DeepEval: The Open-Source LLM Evaluation Sidekick

DeepEval is an open-source tool designed to tackle the unique challenges of testing LLM applications. Think of it as “Pytest for LLMs”—a way to write tests for your app’s outputs, making sure they meet standards. Whether your app is a chatbot, a Retrieval-Augmented Generation (RAG) pipeline, or an AI agent built with frameworks like LangChain or LlamaIndex, DeepEval has your back.

Here’s what makes DeepEval special:

Unit Testing for LLM Outputs: Test specific interactions, like a customer question and the chatbot’s reply.

30+ Research-Backed Metrics: Evaluate things like hallucination (making stuff up), answer relevancy, and more.

End-to-End and Component Testing: Check the whole app or just parts of it.

Synthetic Data Generation: Create test cases automatically to cover edge scenarios.

Customizable Metrics: Tailor tests to your needs.

Security Checks: Scan for vulnerabilities or harmful outputs.
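The difference between end-to-end and component testing is easiest to see on a toy pipeline. The sketch below is plain Python with no DeepEval involved: retrieve is a keyword lookup standing in for a vector search, generate stands in for an LLM call, and all names and policy text are hypothetical.

```python
# Hypothetical knowledge base for the shoe store.
POLICIES = {
    "returns": "All customers are eligible for a 30 day full refund at no extra costs.",
    "shipping": "Standard shipping takes 3-5 business days.",
}


def retrieve(question: str) -> list[str]:
    """Toy retriever: keyword lookup standing in for a vector search."""
    q = question.lower()
    hits = []
    if "fit" in q or "return" in q or "refund" in q:
        hits.append(POLICIES["returns"])
    if "ship" in q or "deliver" in q:
        hits.append(POLICIES["shipping"])
    return hits


def generate(question: str, context: list[str]) -> str:
    """Toy generator: a real app would send the question and context to an LLM."""
    if not context:
        return "Sorry, I don't know."
    return "Based on our policy: " + " ".join(context)


def answer(question: str) -> str:
    """The full pipeline: retrieve, then generate."""
    return generate(question, retrieve(question))


# Component test: did the retriever fetch the right document?
assert retrieve("What if these shoes don't fit?") == [POLICIES["returns"]]

# End-to-end test: does the final reply mention the 30-day window?
assert "30 day" in answer("What if these shoes don't fit?")
print("component and end-to-end checks passed")
```

If the end-to-end check ever fails, the component check tells you whether retrieval or generation is to blame.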

DeepEval uses other LLMs and natural language processing (NLP) models to judge your app’s performance.

Running LLM Evaluation: Three Key Methods

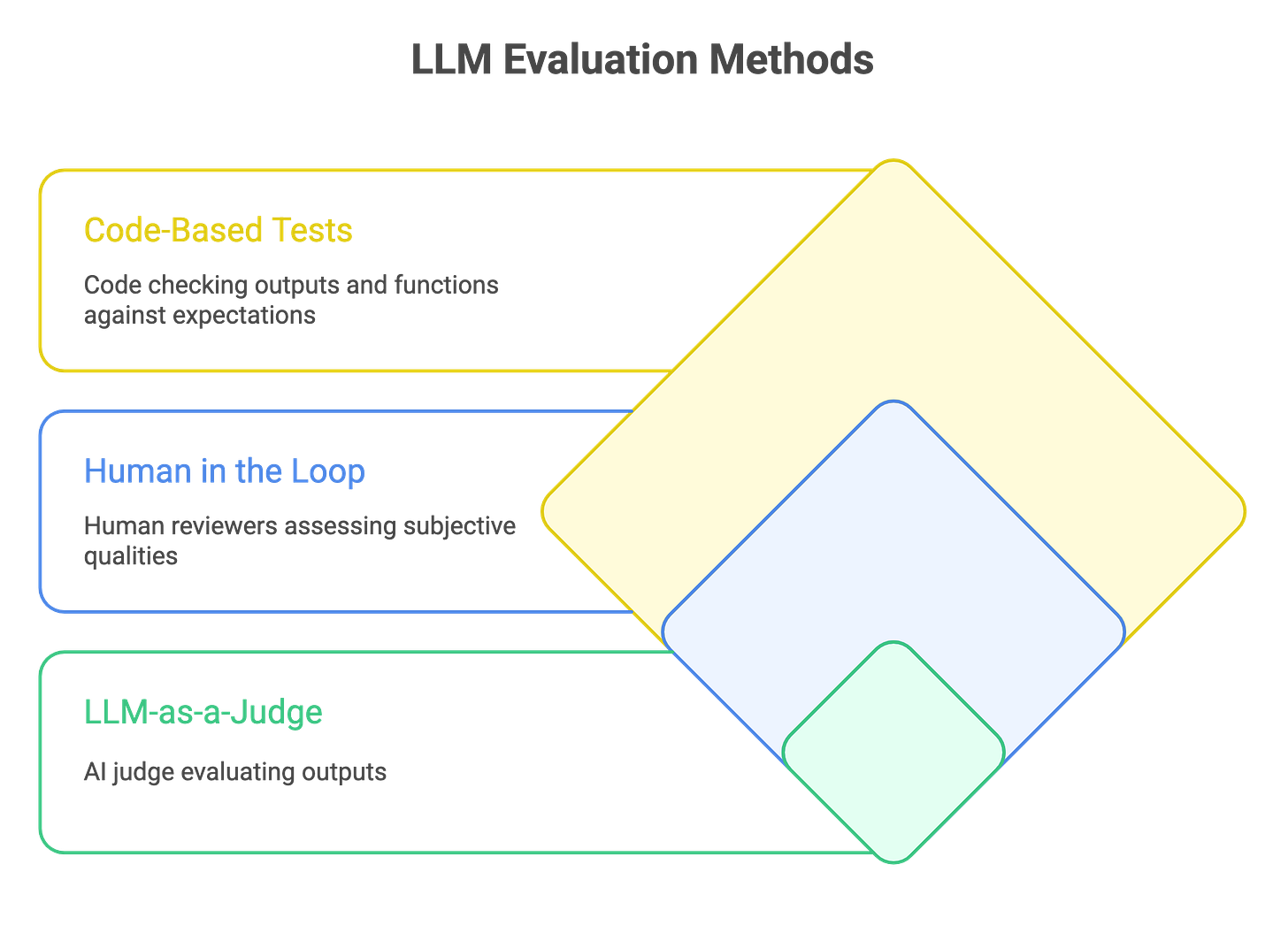

There are three primary methods for evaluating your LLM app’s performance and catching malfunctions.

Code-Based Tests: Write code to check outputs against expectations, such as regex or string operations. For example, “Does the response contain the word ‘refund’?” This is great for technical folks who want precise control.

Human in the Loop (HITL): Get real people—like your team or beta testers—to review outputs. This is perfect for judging subjective qualities, like whether a response feels friendly or professional.

LLM-as-a-Judge Evaluations: Use another LLM to score your app’s outputs. This is DeepEval’s star feature—an AI “judge” evaluates things like correctness or relevance based on rules you set. It’s fast, scalable, and mimics human judgment without needing a crowd of testers.
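As a concrete example of a code-based test, the hypothetical check below uses Python’s re module to confirm that a reply mentions refunds and to flag any return window other than the 30 days in our policy. The rules are illustrative, not a recommended rule set:

```python
import re


def check_refund_reply(reply: str) -> list[str]:
    """Return a list of failed checks (an empty list means the reply passed)."""
    failures = []
    # Rule 1: the reply must mention refunds at all.
    if not re.search(r"\brefunds?\b", reply, re.IGNORECASE):
        failures.append("does not mention 'refund'")
    # Rule 2: any "N days" / "N-day" window it states must be 30.
    windows = re.findall(r"\b(\d+)[- ]days?\b", reply)
    if any(w != "30" for w in windows):
        failures.append(f"wrong return window: {windows}")
    return failures


print(check_refund_reply("You have 30 days to get a full refund at no extra cost."))  # []
print(check_refund_reply("You have 90 days to return your shoes."))
```

Checks like this are cheap and exact, but every rule has to be written by hand, and they miss anything you didn’t anticipate.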

For this article, we’ll focus on LLM-as-a-Judge, as it’s DeepEval’s core strength and what we’ll demo in our tutorial.
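Conceptually, an LLM judge is a prompt plus a parser. The library-independent sketch below is not DeepEval’s code: it fills a grading prompt with the question, expected output, and actual output, asks a judge model for a JSON verdict, and applies a pass threshold. The prompt wording, the JSON format, and the EvalCase class are assumptions, and fake_judge is a stub standing in for a real model call so the sketch runs offline.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class EvalCase:
    input: str
    actual_output: str
    expected_output: str


# Hypothetical grading prompt; doubled braces are literal braces in str.format.
JUDGE_TEMPLATE = """You are grading a customer-support reply.
Criteria: Is the actual output factually consistent with the expected output?
Question: {input}
Expected output: {expected_output}
Actual output: {actual_output}
Reply with JSON only: {{"score": <number from 0 to 1>, "reason": "<one sentence>"}}"""


def judge_test_case(case: EvalCase, call_judge: Callable[[str], str], threshold: float = 0.5):
    """Fill the prompt, parse the judge's JSON verdict, and apply the pass threshold."""
    prompt = JUDGE_TEMPLATE.format(
        input=case.input,
        expected_output=case.expected_output,
        actual_output=case.actual_output,
    )
    verdict = json.loads(call_judge(prompt))
    score = float(verdict["score"])
    return score, verdict["reason"], score >= threshold


def fake_judge(prompt: str) -> str:
    """Stub for a real LLM call so the sketch runs offline."""
    return json.dumps({"score": 0.9, "reason": "Same 30-day refund policy, different wording."})


case = EvalCase(
    input="What if these shoes don't fit?",
    actual_output="You have 30 days to get a full refund at no extra cost.",
    expected_output="We offer a 30-day full refund at no extra costs.",
)
print(judge_test_case(case, fake_judge))
```

In a real setup, call_judge would wrap your LLM provider’s client; DeepEval takes care of the prompting, parsing, and scoring for you.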

DeepEval’s Metrics Toolbox

In DeepEval, a metric is like a ruler: it measures how well your app performs against a specific standard (e.g., “Is this answer relevant?”). A test case is what you’re measuring, like a single customer question and the chatbot’s reply. DeepEval provides a range of pre-built metrics, most of them powered by LLM-as-a-Judge.

Here’s a rundown:

General Metrics

G-Eval: A flexible metric where you define custom criteria and have an LLM score it.

DAG (Deep Acyclic Graph): Ensures consistent scoring by structuring the evaluation process.
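The idea behind DAG-style scoring is to replace one holistic judgment with a fixed tree of narrow yes/no questions, where the leaf you reach determines the score. Here’s a toy version in plain Python; the lambda predicates stand in for what would be LLM judgments in DeepEval, and the rubric and leaf scores are made up:

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class Leaf:
    score: float


@dataclass
class Question:
    ask: Callable[[str], bool]  # stands in for an LLM yes/no judgment
    if_yes: "Node"
    if_no: "Node"


Node = Union[Leaf, Question]


def walk(node: Node, reply: str) -> float:
    """Follow yes/no branches until a leaf; the leaf fixes the score."""
    while isinstance(node, Question):
        node = node.if_yes if node.ask(reply) else node.if_no
    return node.score


# Hypothetical rubric for a refund-policy reply.
tree = Question(
    ask=lambda r: "refund" in r.lower(),
    if_yes=Question(
        ask=lambda r: "30" in r,
        if_yes=Leaf(1.0),
        if_no=Leaf(0.3),  # talks about refunds but gets the window wrong
    ),
    if_no=Leaf(0.0),  # ignores the refund question entirely
)

print(walk(tree, "You have 30 days to get a full refund."))  # 1.0
print(walk(tree, "Refunds are available within 90 days."))   # 0.3
print(walk(tree, "Shoes are great for walking."))             # 0.0
```

Because the score depends only on the path taken, the same set of yes/no verdicts always yields the same score, which is where the consistency comes from.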

RAG-Specific Metrics (for apps that retrieve info before answering)

Answer Relevancy: Does the reply match the question?

Faithfulness: Is the answer true to the retrieved info, or did it hallucinate?

Contextual Relevancy, Precision, Recall: How well does the retrieved info support the answer?
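Once a judge has labeled the individual pieces, several of these metrics reduce to simple ratios. DeepEval’s documentation describes Faithfulness as the number of truthful claims divided by the total number of claims, and Answer Relevancy as relevant statements divided by total statements. The arithmetic below uses invented, hand-labeled verdicts where DeepEval would use an LLM judge:

```python
def ratio(verdicts: list[bool]) -> float:
    """Share of True verdicts; treating an empty list as 1.0 is an assumption of this sketch."""
    return sum(verdicts) / len(verdicts) if verdicts else 1.0


# Claims extracted from a chatbot reply, labeled against the retrieval context.
reply_claims = {
    "Customers get a full refund.": True,     # supported by the context
    "The refund window is 30 days.": True,    # supported by the context
    "Refunds arrive within 24 hours.": False, # not in the context: hallucinated
}
faithfulness = ratio(list(reply_claims.values()))

# Statements in the reply, labeled for relevance to "What if these shoes don't fit?"
statements = {
    "You can return them within 30 days.": True,
    "Our shoes are made in Italy.": False,
}
answer_relevancy = ratio(list(statements.values()))

print(f"faithfulness={faithfulness:.2f} answer_relevancy={answer_relevancy:.2f}")
# faithfulness=0.67 answer_relevancy=0.50
```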

Agent-Specific Metrics (for AI agents that take actions)

Tool Correctness: Did it pick the right tool (e.g., a calculator vs. a search)?

Task Completion: Did it finish the job?
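Tool Correctness doesn’t always need an LLM: you can compare the tools an agent called with the tools you expected it to call. The sketch below is a simplified illustration, not DeepEval’s exact formula, and the agent trace and tool names are hypothetical:

```python
def tool_correctness(called: list[str], expected: list[str]) -> float:
    """Toy score: fraction of expected tools the agent actually called (order ignored)."""
    if not expected:
        return 1.0
    return len(set(called) & set(expected)) / len(set(expected))


# Hypothetical trace for "Where is my order #123?": the agent should look up
# the order and then check its shipping status.
expected_tools = ["lookup_order", "get_shipping_status"]
called_tools = ["lookup_order", "web_search"]

print(tool_correctness(called_tools, expected_tools))  # 0.5
```

This version ignores the extra web_search call; a stricter variant could penalize unexpected tools or check the call order.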

Chatbot Metrics (for conversational apps)

Conversational G-Eval: Overall chat quality across multiple turns.

Knowledge Retention: Does it remember earlier parts of the convo?

Role Adherence: Does it stay in character (e.g., professional vs. casual)?

Other Handy Metrics

JSON Correctness: Is the output in the right format?

Hallucination: Did it make up facts?

Toxicity and Bias: Is it safe and fair?

Summarization: How good is it at summarizing?
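JSON Correctness is a case where no judge is needed, because validity can be checked mechanically. DeepEval’s metric compares the output against a schema you supply; the sketch below applies the same idea with only the standard library, using a hypothetical REQUIRED_FIELDS dictionary of field names and types as the schema:

```python
import json

# Hypothetical schema: an order-status bot must return these keys with these types.
REQUIRED_FIELDS = {"order_id": str, "status": str, "days_to_delivery": int}


def json_correct(output: str) -> bool:
    """True if output parses as a JSON object with every required field of the right type."""
    try:
        data = json.loads(output)
    except json.JSONDecodeError:
        return False
    if not isinstance(data, dict):
        return False
    return all(isinstance(data.get(k), t) for k, t in REQUIRED_FIELDS.items())


print(json_correct('{"order_id": "A123", "status": "shipped", "days_to_delivery": 2}'))  # True
print(json_correct('{"order_id": "A123", "status": "shipped"}'))                        # False
print(json_correct("Your order has shipped!"))                                          # False
```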

Hands-On Tutorial: Testing Your Chatbot with DeepEval

Let’s put DeepEval to work! We’ll test our shoe store chatbot to ensure it answers a sizing question correctly.

Step 1: Install DeepEval

You’ll need Python installed. Open a terminal (a command window) and type:

```bash
pip install -U deepeval
```

Step 2: (Optional) Set Up Cloud Dashboard

If you want your experiments and traces synced to an online dashboard, visit https://deepeval.com and create a free account (the hosted dashboard is run by Confident AI, the company behind DeepEval), then log in from the terminal with this command:

```bash
deepeval login
```

Follow the prompts to log in. This lets you see test reports online.

Step 3: Write a Test Case

Create a file called test_chatbot.py (imagine it’s your test recipe card). Here’s what goes inside:

```python
import pytest  # deepeval test run uses pytest under the hood

from deepeval import assert_test
from deepeval.metrics import GEval
from deepeval.test_case import LLMTestCase, LLMTestCaseParams


def test_correctness():
    # G-Eval metric: an LLM judge scores the reply against these criteria
    correctness_metric = GEval(
        name="Correctness",
        criteria="Determine if the 'actual output' is correct based on the 'expected output'.",
        evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT, LLMTestCaseParams.EXPECTED_OUTPUT],
        threshold=0.5,  # Pass if score is 0.5 or higher (out of 1)
    )

    # Create a test scenario
    test_case = LLMTestCase(
        input="What if these shoes don't fit?",  # Customer question
        actual_output="You have 30 days to get a full refund at no extra cost.",  # Chatbot's reply
        expected_output="We offer a 30-day full refund at no extra costs.",  # What we want
        retrieval_context=["All customers are eligible for a 30 day full refund at no extra costs."],  # Background info
    )

    # Run the test: fails if the metric's score is below its threshold
    assert_test(test_case, [correctness_metric])
```

What’s Happening Here?

Input: The customer’s question.

Actual Output: What your chatbot says (replace this with its real response later).

Expected Output: The ideal answer.

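Retrieval Context: Extra info the chatbot might use (common in RAG setups). The Correctness metric above doesn’t read it, because its evaluation_params list only the actual and expected outputs; RAG metrics such as Faithfulness are the ones that use it.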

G-Eval: An LLM judge compares the actual and expected outputs and produces a score from 0 to 1. A score of 0.5 or higher passes.
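Where does a score like 0.9 come from? The G-Eval paper this metric is named after weights each candidate score by the probability the judge model assigns to it, rather than trusting a single sampled number, and DeepEval’s docs describe applying a similar weighting when the judge model exposes token probabilities. A sketch of that calculation with invented probabilities, assuming a 0-10 scale normalized to 0-1:

```python
def weighted_score(score_probs: dict[int, float], max_score: int = 10) -> float:
    """Probability-weighted average of candidate scores, normalized to the 0-1 range."""
    total_prob = sum(score_probs.values())
    expected = sum(s * p for s, p in score_probs.items()) / total_prob
    return expected / max_score


# Made-up probabilities a judge might assign to the scores 8, 9 and 10.
probs = {8: 0.2, 9: 0.5, 10: 0.3}
score = weighted_score(probs)
threshold = 0.5
print(round(score, 2), score >= threshold)  # 0.91 True
```

The final comparison mirrors threshold=0.5 in the test file: a score of 0.5 or higher passes.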

Step 4: Set Your API Key

DeepEval uses an OpenAI model as its judge by default. Tell it your OpenAI API key (get one from openai.com):

```bash
export OPENAI_API_KEY="your-key-here"
```

Enter the command above in your terminal. On Windows Command Prompt, use set OPENAI_API_KEY=your-key-here instead. You can swap OpenAI for other judge models; check DeepEval’s docs for how to do this.
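DeepEval reads the key from the environment. If you’d rather get a clear error up front than a failed API call mid-run, a small guard like the hypothetical require_api_key helper below (called at the top of your test file, for example) checks for the variable first:

```python
import os
from typing import Mapping, Optional


def require_api_key(env: Optional[Mapping[str, str]] = None, name: str = "OPENAI_API_KEY") -> str:
    """Return the judge model's API key, or raise a clear error if it is missing."""
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"{name} is not set; export it before running the tests.")
    return key


# Passing a dict makes the helper easy to try without touching real environment variables.
print(require_api_key({"OPENAI_API_KEY": "sk-example"}))  # sk-example
```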

Step 5: Run the Test

Back in the terminal, type:

```bash
deepeval test run test_chatbot.py
```

DeepEval will run the test and tell you whether it passed (✅) or failed (❌), with a score and reasoning. For our example, the outputs are similar, so it should pass!

What You’ll See

A score (e.g., 0.9) showing how close the actual output is to the expected one.

A reason (e.g., “The response conveys the same refund policy with slight wording differences”).

Pass/fail based on the 0.5 threshold.

This is your first taste of LLM evaluation—simple yet powerful!

Where to Go Next

You’ve just scratched the surface! DeepEval offers tons more—metrics for RAG, agents, and chatbots, plus custom options. Dive into the DeepEval documentation for tutorials on advanced testing, integrating with tools like LangChain, and more. Stay tuned for future articles in this series, where we’ll tackle deploying production-ready LLM apps and fine-tuning models for your needs.

Wrap-Up

Evaluating your LLM application isn’t just a techy detail—it’s the key to building something reliable and customer-ready. With DeepEval, you can catch biases, inaccuracies, and weak spots before they cause trouble, all without needing a PhD in AI.

Whether you’re a beginner or a seasoned enthusiast, this process empowers you to create GenAI apps that truly deliver. So, grab DeepEval, test your LLM apps, and let’s keep building amazing things together!