Building GenAI Apps #5: Build a RAG Application with MongoDB Atlas Vector Search, LangChain, and OpenAI

Say you are planning a trip and ask an LLM chatbot, "How do I get from New York to London?" It cheerfully replies, "Hop on a giant turtle—it’s a scenic 12-minute ride!" Funny, but useless. This is what happens when AI "hallucinates"—it invents answers that sound convincing but are totally wrong. Now imagine you’re a business relying on AI to answer customer questions or manage private data, like employee records. One wild turtle-style answer could confuse clients, break trust, or even violate privacy rules.

But what if there was a way to make AI smarter—grounding it in real, reliable information while keeping your data secure?

That’s where Retrieval-Augmented Generation (RAG) steps in. It’s a practical fix that pairs AI’s creativity with actual facts, perfect for anyone who’s ever wished their tech could "just get it right." In this article, I’ll show you how to build a RAG-powered app using MongoDB Atlas Vector Search, LangChain, and OpenAI.

We’ll use a relatable HR example to bring it to life, but the real magic is how RAG can work for your challenges—big or small.

A Real-World Problem: The HR Time Crunch

Meet Alex, an HR manager swamped with resumes—over 1,000 for one job. Old-school Application Tracking Systems (ATS) tools can search for keywords like "Python, AWS, Pandas" but they miss the bigger picture—like a candidate’s knack for solving tough problems.

Alex wants to ask, "Who’s great at Machine Learning and cloud tech?" and get spot-on answers fast. RAG makes this possible, and we’ll walk through how it works.

What is RAG?

Retrieval-Augmented Generation (RAG) is like giving your AI a trusty librarian and a great storyteller rolled into one. Here’s the gist:

Retrieval: It digs into a pile of real data—like resumes or company docs—to find what matters.

Generation: It crafts clear, accurate answers using that data, not random guesses.

Think of it as AI with a fact-checking buddy. Instead of making up turtle rides, it pulls from what’s real and relevant—perfect for businesses guarding private info or anyone needing dependable results.

How RAG Works

Start with Data: Take something like a stack of resumes.

Turn It into Meaning: Convert the words into a kind of "smart code" that captures what they’re about, i.e, word embeddings.

Store It Smartly: Keep that code in a vector store database (like MongoDB Atlas) that finds matches lightning-fast.

For Alex, this means spotting top talent in seconds.

Why Should You Care?

RAG isn’t just tech buzz—it solves real headaches:

No More Guessing: Answers stick to the facts.

Spot-On Results: It gets what you’re asking for.

Saves Time: Cuts through the noise instantly.

Works Anywhere: From HR to customer service to your next big idea.

Our HR story is just one example—RAG’s power fits wherever you need it.

HR Labs: Resume Dataset

We’re using a resume collection from Kaggle (PDFs + JPG + PNG files) for this hands-on tutorial. This repository contains resume files detailing skills and experiences from different Job applicants.

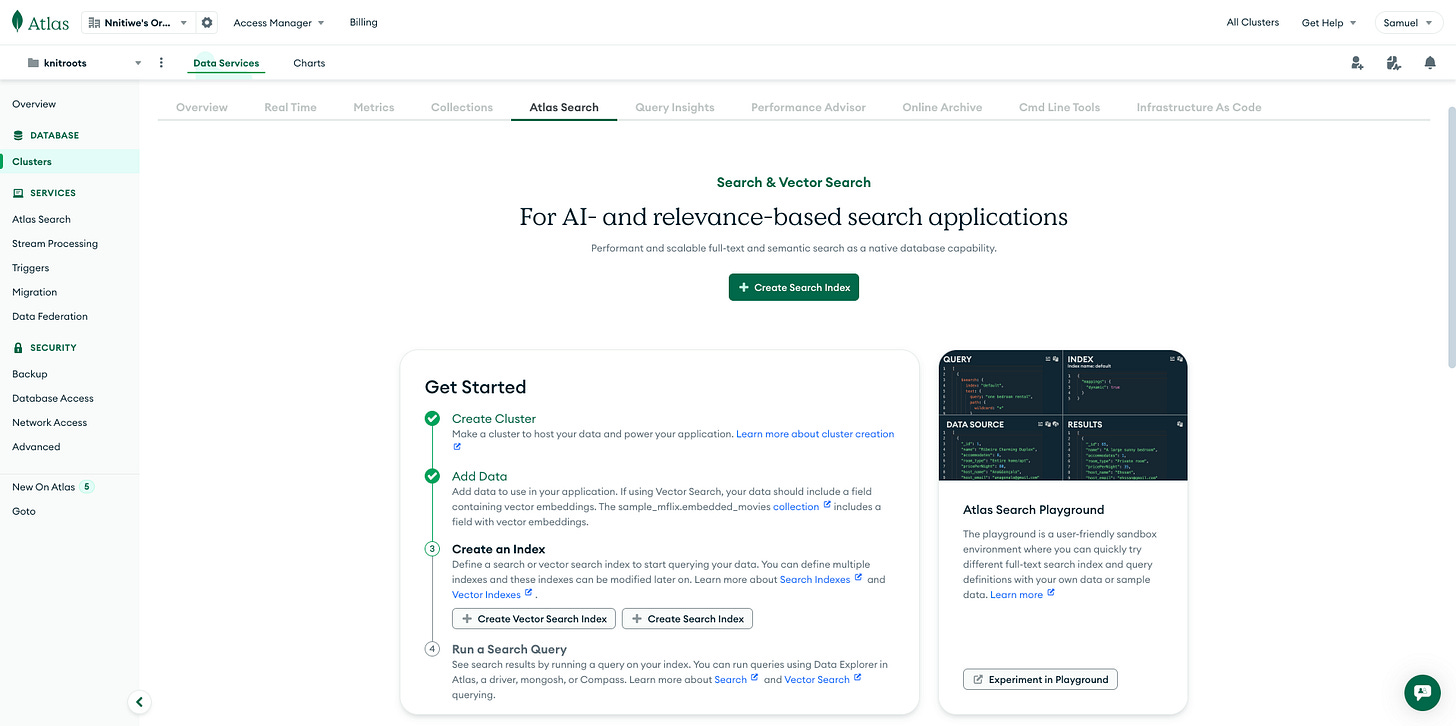

Step 1: Setting Up MongoDB Atlas

MongoDB Atlas is a cloud-hosted, fully managed NoSQL database—unlike traditional MongoDB, it handles scaling, backups, and security for you. It’s our vector store, holding resume embeddings for fast retrieval.

Get Started:

Sign up for a free Atlas account.

Create a cluster (free tier works fine).

Set network access, adding your device’s

IP addressor0.0.0.0/0(to keep things simple). This gives all IPs access to your Database—be cautious with the latter.Create a database user and grab your connection string.

Step 2: Install Python Libraries and connect to DB

Install pymongo:

pip install pymongoGet the Connection String:

Select "Connect Your Application".

Choose your driver and version (e.g., Python and 3.6 or later).

Copy the provided connection string.

Use the Connection String in Your Code:

Replace

`<username>`,`<password>`,`<dbname>`and `<my_collection>`with your database user credentials, database name and collection/table name.from pymongo import MongoClient connection_string = "mongodb+srv://<username>:<password>@<cluster-url>/<dbname>?retryWrites=true&w=majority" client = MongoClient(connection_string) # Access a database db = client.get_database('<dbname>') # Access a collection collection = db.get_collection('<my_collection>') # Perform operations document = collection.find_one() print(document)

Install other Python libraries and other dependencies:

!pip install "unstructured[all-docs]" -q !apt-get -qq install poppler-utils tesseract-ocr -q !pip install -q --user --upgrade pillow -q !pip install langchain tiktoken langchain-community !pip install pymongo==4.6.1 -q !pip install openai -q

Step 3: Creating a Vector Search Index

To unlock semantic search, we need a vector search index in MongoDB Atlas. This uses k-nearest neighbors (kNN) with cosine similarity to match resumes by meaning, not just keywords.

Steps:

Go to your cluster’s “Collections” tab.

Select your database and collection.

Click

“Search Indexes”>“Create Search Index.”

Name it default and use this JSON:

{

"mappings": {

"dynamic": false,

"fields": {

"embedding": {

"type": "knnVector",

"dimensions": 1536,

"similarity": "cosine"

}

}

}

}For Alex, this is a game-changer—semantic matching finds candidates with “systems design” experience, even if they phrase it differently.

Step 4: Loading and Processing Data

Now, we transform resumes into searchable vectors using LangChain, a framework that simplifies AI workflows.

Replace <mongodb_server_connection_url> with your connection string and <openai_api_key> with your OpenAI API key.

Code Breakdown:

#Import Libraries

from pymongo import MongoClient

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.vectorstores import MongoDBAtlasVectorSearch

from langchain.document_loaders import DirectoryLoader

import json

db_name = "human-resource-rag"

collection_name = "job-applicants-gpt"

# Create a new client and connect to the server

client = MongoClient(<mongodb_server_connection_url>)

collection = client[db_name][collection_name]

#Load PDF files

loader = DirectoryLoader( '/content', glob="./*.pdf", show_progress=True)

data = loader.load()

#Create embeddings with OpenAI & Load Data to Database

embeddings = OpenAIEmbeddings(api_key=<openai_api_key>)

vectorStore = MongoDBAtlasVectorSearch.from_documents( data, embeddings, collection=collection)

DirectoryLoader reads PDFs, OpenAI’s embeddings turn text into vectors, and MongoDBAtlasVectorSearch stores them in Atlas.

Step 5: Querying with LangChain

Let’s find candidates with specific skills. LangChain handles the semantic search, leveraging our vector store.

Example:

def execute_query(query):

results = vector_search.similarity_search(query=query,k=2)

search_results=[]

if len(results)>0:

for result in results:

search_results.append(dict(result))

return search_results

#Search Database

vector_search = MongoDBAtlasVectorSearch.from_connection_string(

<mongodb_server_connection_url>,

db_name+"."+collection_name,

OpenAIEmbeddings(api_key=<openai_api_key>),

index_name="default")

query = "Candidates with Machine Learning and AWS experience"

results = [i['page_content'] for i in execute_query(query)]This finds resumes based on meaning, not exact matches.

Step 6: Generating Insights & Structured Outputs with OpenAI

Finally, we use OpenAI to process results and deliver polished answers, reducing hallucination by grounding responses in retrieved data.

#Basis Processing Output with OpenAI

from openai import OpenAI

client = OpenAI(api_key=<openai_api_key>)

response = client.chat.completions.create(

model="gpt-4o",

messages=[

{"role": "system", "content": "You are a useful assistant. Use the assistant's content to answer the user's query. Create a markdown table with columns 'Candidate name', 'top 5 skills', 'current job title','years of experience' and 'document source' (metadata source)"},

{"role": "assistant", "content": f"{context}"},

{"role": "user", "content": f"{new_query}"}],

temperature = 0.2

)

response.choices[0].message.contentIf you want to learn advanced techniques for generating structured and consistent JSON outputs with OpenAI, read this article, “Extracting Data with OpenAI: An Introduction to Function Calling and JSON Formatting”.

Popular RAG Use Cases

While our HR case study showed how RAG can streamline talent management, its real strength lies in its adaptability. Below, we’ll explore three key use cases—semantic search, chatbot context, and knowledge bases—with concrete examples that bring their value to life.

1. Semantic Search: Beyond Keywords to Intent

Traditional search engines are like blunt tools—they match keywords but often miss the mark on meaning. Ask for "best laptop for gaming," and you might get a list of pages heavy on "gaming" but light on relevance. RAG-powered semantic search flips this on its head, acting like an intuitive guide that understands what you really mean.

Example: Imagine searching an e-commerce site for "cozy winter jacket." A semantic search might pull up a "warm fleece-lined coat" that doesn’t mention "cozy" but nails the vibe. Compare that to a keyword search dumping you in a pile of irrelevant parkas.

2. Chatbot Context: Smarter, Not Just Scripted

RAG transforms chatbots into dynamic helpers, pulling real-time info from a knowledge base to deliver responses that hit the mark.

Example: Picture a customer asking, "What’s the warranty on my order?" A RAG-powered chatbot might check the order number, pull the product’s warranty details, and say, "Your item from order #5678 has a 2-year warranty—want the return steps too?" Contrast that with a generic "Check our warranty page."

3. Knowledge Bases: Empowering Teams with Answers

RAG turns internal knowledge bases into a superpower, giving employees fast, precise answers in plain language.

Example: A sales rep wonders, "How do I pitch our new product?" RAG pulls the latest product docs and training materials, offering, "Highlight the 20% efficiency boost—here’s the demo script." No more hunting through folders or bugging a manager.

Conclusion

With MongoDB Atlas Vector Search, LangChain, and OpenAI, we’ve built a RAG-powered ATS that rescues Alex from resume overload. Hours saved, quality hires boosted, and hidden talent uncovered—all thanks to RAG’s blend of retrieval and generation. Whether you’re a business owner streamlining operations or an AI enthusiast exploring new frontiers, RAG is your key to smarter, context-driven apps.

Try it yourself with our Colab Notebook.