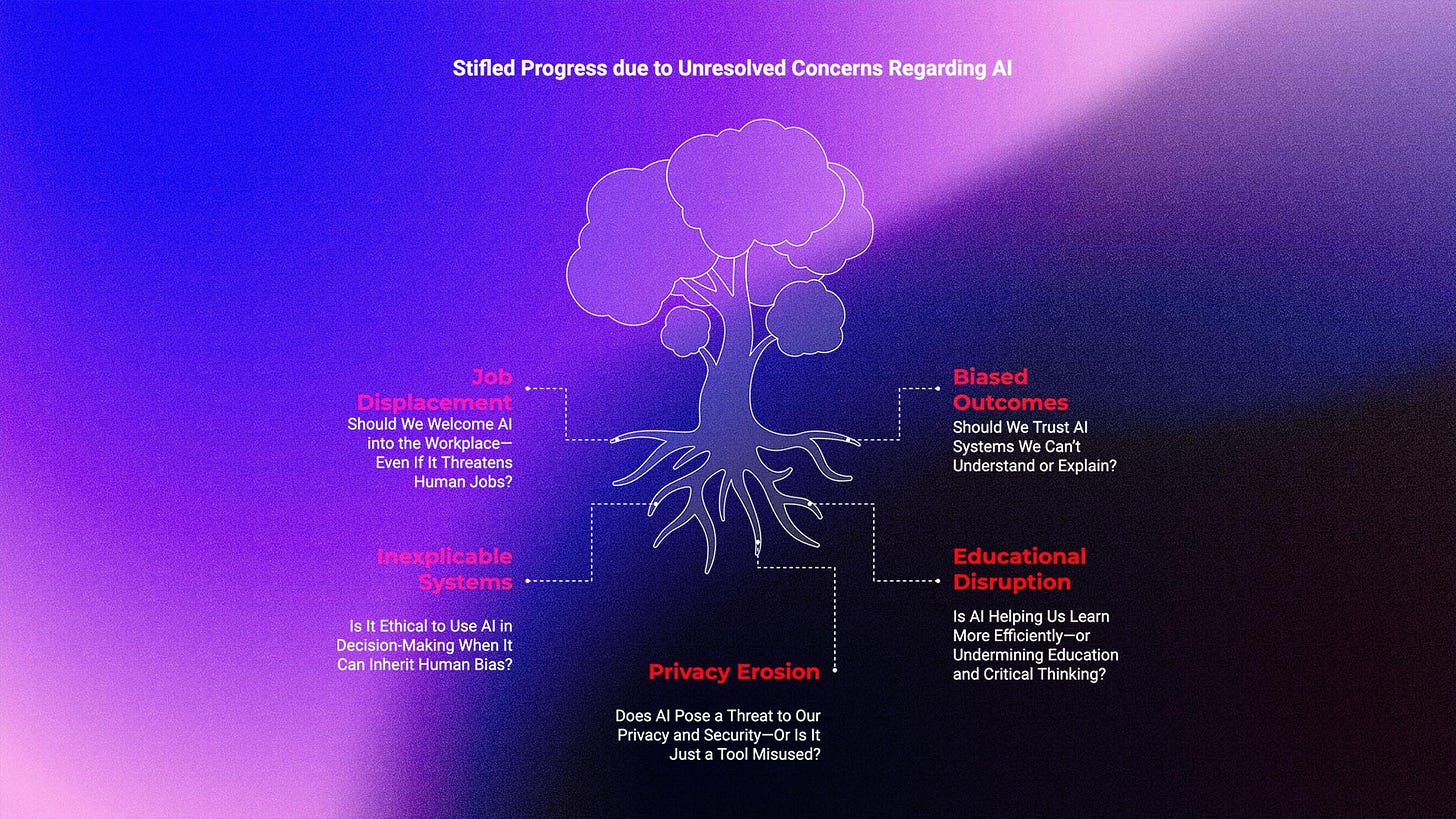

The Great AI Debate: Are We Pioneering Progress or Just Playing with Fire?

Uncensored Perspectives on AI’s Potential, Risks, and the Road Ahead

Let’s set the scene.

AI is not creeping in—it’s sprinting. In hospitals, algorithms spot tumors faster than seasoned radiologists. On Wall Street, they predict market swings with eerie precision. Even in your living room, AI-powered assistants like Alexa know your pizza order before you do.

The stats are staggering: according to Precedence Research, the global AI market size could hit $3,680.47 billion by 2034, expanding at a CAGR of 19.20% from 2025 to 2034. The AI Optimist sees a utopia—a world where grunt work vanishes, and humans focus on big ideas. But the AI Skeptic isn’t buying the hype.

There is a heavy debate about AI's darker sides: photographers losing gigs to AI art generators, truck drivers sidelined by self-driving rigs, and a growing unease about who—or what—controls the reins. Real-world stumbles, like biased hiring tools or deepfake scandals, only deepen the divide.

This is not just a tech story—it’s a human one too, and my goal is to cut through the noise with a debate that informs and prepares you to wrestle with AI’s double-edged sword.

The Debate: AI Optimists vs. AI Skeptics

1. Should We Welcome AI into the Workplace—Even If It Threatens Human Jobs?

AI Skeptic:

Let’s get real. AI is not just nibbling at jobs; it is swallowing them whole. Take photography: tools like Midjourney churn out stunning images from a single prompt, leaving pros scrambling. Voice-over artists find their cloned voices narrating ads they never signed up for, and AI can mimic Meryl Streep in minutes. Coders? GitHub Copilot spits out functional scripts faster than you can say “bug fix.” This is not a gentle nudge toward efficiency; it is a tidal wave threatening livelihoods. Why cheer for a tech that could render millions obsolete?

AI Optimist:

Easy there—AI’s not the villain you’re painting. It is a partner, not a replacement. Sure, it can generate a photo, but it lacks the human eye that catches the perfect sunset glow. Voice clones? They’re slick but don’t feel the script’s soul like a seasoned actor does. And coding? AI drafts boilerplate, but developers still debug, optimize, and dream up the architecture.

Look at history: the Industrial Revolution didn’t end work—it birthed new roles. McKinsey’s 2030 forecast agrees—AI could create 20 million jobs globally, from data trainers to AI Skeptics, even as it displaces others. Companies like Amazon are already reskilling workers, turning warehouse staff into robotics techs. It’s not extinction; it’s evolution.

AI Skeptic:

Evolution’s a rosy word for those with cushy degrees. What about the cashier replaced by a kiosk or the trucker outpaced by Tesla’s semi? Retraining sounds great, but it’s slow, costly, and not everyone’s cut out for “AI supervisor” gigs. The gap between promise and reality leaves people stranded.

AI Optimist:

Fair point: transitions suck. But stalling AI won’t rewind the clock; it’ll just cede the edge to competitors. The fix isn’t resistance; it’s preparation. Governments can fund retraining, and businesses can pivot too: Walmart is pairing AI with human cashiers, not axing them. AI doesn’t steal jobs; it reshuffles them. Humans stay in the driver’s seat.

The job quake is real, but it’s not Armageddon. For workers, lean into AI—use it to sharpen your skills, not sideline them. Businesses, don’t just automate; upskill your crew. Policymakers, build bridges—think subsidies or job transition hubs. AI’s a force multiplier, not a firing squad—steer it right, and we all win.

2. Is It Ethical to Use AI in Decision-Making When It Can Inherit Human Bias?

AI Skeptic:

Here’s the rub: AI isn’t neutral; it’s a sponge for our flaws. Feed it biased data, and it spits out biased calls. Take hiring: Amazon’s experimental recruiting tool, whose failure was reported in 2018, downgraded women’s resumes because it learned from a decade of male-heavy hires. Or criminal justice: the COMPAS risk-assessment algorithm flagged Black defendants as riskier, amplifying racial skews. This isn’t abstract; it’s justice on the line. How can we lean on AI when it parrots our prejudices?

AI Optimist:

Bias is a glitch, not a death sentence. We’re not helpless: tools like Google’s What-If Tool analyze datasets for skew, while IBM’s AI Fairness 360 sniffs out discrimination. The trick? Diverse inputs and constant audits. Humans aren’t saints either; studies show judges favor harsher sentences before lunch. AI, done right, can outstrip us by standardizing fairness.

The EU AI Act has our back, mandating transparency for high-stakes systems. Picture this: AI screens job applicants, and humans interview them. It’s a tag team, not a takeover.

AI Skeptic:

Audits are cool, but AI still misses the human spark—grit, context, that gut call. A woman’s resume might lack buzzwords but scream potential. AI wouldn’t see it. And in policing, numbers don’t capture a kid’s backstory. It’s cold math masquerading as justice.

AI Optimist:

Cold math sometimes beats hot bias. AI flags patterns—like recidivism risks—humans add the soul. In hiring, it narrows the pool; recruiters pick the gems. It’s not flawless, but neither are we. The key? Oversight. DARPA’s Explainable AI program is cracking the code, making AI’s logic legible. We’re not dumping decisions on machines; we’re arming ourselves with sharper tools.

Bias isn’t AI’s Achilles’ heel; it’s ours. Users should not blindly trust AI outputs; question them. Developers, integrate fairness: diverse data, regular checks, and human vetoes. Regulators should enforce clarity, like the EU’s risk tiers. AI can amplify justice, but only if we watchdog it.
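One simple shape a “regular check” can take is comparing selection rates across groups. The sketch below is illustrative only: the sample data, the group labels, and the 0.8 cutoff (borrowed from the “four-fifths” rule of thumb used in US employment contexts) are assumptions, and a real audit would look at far more than one ratio.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the model advanced the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical screening results: (group, was_shortlisted)
sample = (
    [("A", True)] * 6 + [("A", False)] * 4 +
    [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed threshold; tune it to your context
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own, but it is a cheap signal that a human should take a closer look.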

3. Should We Trust AI Systems We Can’t Understand or Explain?

AI Skeptic:

Imagine this: an AI green-lights a cancer treatment, but no one knows why. Or it denies your loan, and the bank shrugs: “The algorithm said so.” These “black boxes” are Russian roulette with real stakes. In healthcare, finance, and law, opacity breeds distrust. Shouldn’t we demand a peek under the hood?

AI Optimist:

We’re peeling back the curtain—slowly. Explainable AI (XAI) is the buzzword, with tools like LIME tracing decisions back to inputs. AI might flag a tumor in healthcare, but doctors confirm it with scans. The EU AI Act demands this for high-risk stuff—think parole or credit scoring. Low-risk? A chatbot picking your Netflix queue doesn’t need a dissertation.

Complexity is the trade-off: Deep learning’s power comes from layers we can’t always untangle. However, trust is not blind—pilots operate planes without constructing engines. We confirm results, not designs.

AI Skeptic:

Verification is shaky when stakes skyrocket. Patients want to know why, not just what. And lazy users might rubber-stamp AI, assuming it’s gospel because it’s “smart.” That’s a disaster brewing.

AI Optimist:

That’s a people problem, not a tech one. Education’s the antidote: teach skepticism. In finance, analysts cross-check AI’s market bets. DARPA’s XAI push shows promise; think heatmaps highlighting why a model picked “yes.” Trust isn’t about total clarity; it’s about having enough clarity to act. We’re getting there.

Opaque AI isn’t a dealbreaker; it’s a challenge. Users should push for explanations in big calls. Developers ought to lean on XAI and test rigorously. Regulators need to tier the rules—high risk, high transparency. Trust grows from scrutiny, not magic.
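To make “pushing for explanations” concrete, here is a minimal sketch of one of the simplest explanation techniques, occlusion-style attribution: swap out one input at a time and see how far the prediction moves. It is much cruder than LIME or SHAP and is not how those libraries work internally. The scoring function, feature names, and baseline values are all hypothetical.

```python
def occlusion_attribution(predict, features, baseline):
    """Score each feature by how much the prediction moves when that
    feature alone is replaced with its baseline value."""
    original = predict(features)
    attributions = {}
    for name in features:
        perturbed = dict(features)
        perturbed[name] = baseline[name]
        attributions[name] = original - predict(perturbed)
    return attributions

# Hypothetical loan-scoring model: a stand-in for any opaque predict().
def toy_loan_score(applicant):
    score = 0.5
    score += 0.3 * (applicant["income"] / 100_000)
    score -= 0.4 * applicant["missed_payments"] / 10
    score += 0.0 * applicant["zip_code_risk"]  # deliberately ignored
    return score

applicant = {"income": 50_000, "missed_payments": 5, "zip_code_risk": 0.9}
baseline = {"income": 0, "missed_payments": 0, "zip_code_risk": 0.0}

explanation = occlusion_attribution(toy_loan_score, applicant, baseline)
# Print features from most to least influential.
for name, delta in sorted(explanation.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {delta:+.2f}")
```

Even this rough view answers the question a denied applicant actually asks: which inputs pushed the score up, which pushed it down, and which did nothing.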

4. Is AI Helping Us Learn More Efficiently—or Undermining Education and Critical Thinking?

AI Skeptic:

Education stands on a tightrope. Kids tap ChatGPT for essays, dodging the grind of reasoning. Teachers catch plagiarized brilliance; parents fret over lazy teens; bosses lament grads who can’t think without a bot.

AI is slick, but it hollows out the skills that matter. Are we breeding thinkers or button-pushers?

AI Optimist:

AI is a turbocharger, not a shortcut. It personalizes learning—Khan Academy’s AI tutors slow down for strugglers and speed up for whizzes. It grades stacks of papers, allowing teachers to coach instead of just mark. In research, it’s a beast—sifting through data that would take humans years.

The snag? Cheating. But that’s not AI’s sin—it’s like blaming a pencil for a forged note. Schools can flip it: use AI as a brainstorming buddy, not a ghostwriter. Stanford is testing this—students prompt AI, then critique its output. It’s learning with a twist.

AI Skeptic:

Twist or not, classrooms aren’t prepared. Teachers are outpaced by tech-savvy kids, and rural schools lack the equipment. Over-reliance looms: kids might lean on AI like a crutch, not a ladder.

AI Optimist:

Then rewrite the rules. Teach AI literacy—how to prompt, probe, and outthink it. Finland’s schools do this, blending tech with problem-solving. It’s not about gear; it’s mindset. AI’s a co-pilot—guide it, don’t nap in the back seat.

AI can ignite learning, not snuff it out. Educators, wield it to spark curiosity, not to shortcut it. Students, treat it as a sparring partner — challenge it. The edge? It’s in how we mold it, not just in how it molds us.

5. Does AI Pose a Threat to Our Privacy and Security—Or Is It Just a Tool Misused?

AI Skeptic:

Privacy is on life support. Deepfakes plaster your face on lies—think revenge porn or election hoaxes. Voice clones deceive your family with fake pleas. Bots inundate social media with propaganda; synthetic videos obscure reality. AI isn’t just a tool—it’s a truth-wrecker.

How do we cope?

AI Optimist:

Misuse is the culprit, not AI’s DNA. We’re counterpunching—Adobe’s watermarking AI content; startups like Truepic detect fakes. Privacy tech, like federated learning, trains models without snooping your data. The EU AI Act nudges this—ethical guardrails for risky apps.

Bad actors innovate, sure, but so do we—think cat versus mouse. Users can fight back by verifying sources and shielding their information. Developers can armor up with detection tools and a privacy-first design.

AI Skeptic:

Armor is late. China’s social credit AI tracks citizens like lab rats. Deepfakes outpace detectors. Can we catch up before trust is toast?

AI Optimist:

Not solo—global pacts and corporate integrity are key. The EU’s a start; firms like OpenAI embed ethics early. For now, users must play detective—cross-check, secure data.

AI’s risks are loud, but they are not beyond solutions. Users, remain vigilant: fact-check and strengthen your security. Developers, integrate ethics through detection and privacy technology. Governments, create global regulations. It’s a tool, so wield it; don’t let it wield you.

Case Studies: AI’s Peaks and Pits

Success: JPMorgan Chase’s COiN

JPMorgan’s AI, COiN, is a quiet hero. It scans contracts—thousands of pages—in seconds, slicing 360,000 hours annually. It’s not just fast; it’s clear, with humans double-checking key calls. Costs drop, clients grin, and staff focus on strategy. Proof that AI, with oversight, can sing.

Failure: Amazon’s Hiring AI

Amazon’s hiring tool, whose failure was reported in 2018, was a train wreck. Trained on male-skewed resumes, it snubbed women, even with fixes. Amazon scrapped it, and the episode screamed a truth: garbage data, rushed rollout, no dice. Lesson? AI needs rigor: test it, tweak it, or trash it.

AI’s glory or gore hinges on execution. Prep well, win big; skimp, and flop.

Note to Executives: Navigating AI’s Maze

To harness AI effectively without crashing, you need the right people, a solid understanding of its benefits and drawbacks, and a wise approach to risk management.

Here’s how to steer AI integration so it enhances your company without compromising your data, clients, or reputation. We’ll use the EU AI Act’s risk levels as a guide, not because it’s the law everywhere, but because it offers a sharp perspective on AI’s complexities:

1. Hire Sharp: Pick AI Experts Who Get It

Bring in AI talent who don’t just code, but strategize. Look for experts who:

Master the rules: They understand frameworks like the EU AI Act to ensure that your AI complies with legal and ethical standards. This isn’t just about dodging penalties—it’s about building systems that regulators and customers trust.

Spot trouble: These engineers need to see where AI might fail, whether through biased outputs, security holes, or breakdowns under stress.

Fix it before it breaks: Beyond spotting risks, they should have solid plans—diverse data to cut bias, encryption to lock down breaches, or human oversight for dicey calls.

2. Know the Game: Benefits and Risks, No Coding Required

You don’t need to debug code, but you do need to understand AI’s stakes. Here’s the rundown:

Benefits: AI can turbocharge your operations—faster processes, smarter insights, and happier customers. It’s your edge over the competition.

Risks: AI can backfire hard. Biased hiring tools, data leaks, or opaque decisions can spark lawsuits, ethical messes, or operational chaos. One misstep, and you’re explaining yourself to regulators—or the press.

3. Map the Risks: Understand Guides Like the EU AI Act’s Four Tiers

The EU AI Act sorts AI into four risk levels, from “don’t touch” to “go for it.” It’s not enforced globally, but it’s a brilliant lens for sizing up AI projects anywhere. Here’s how it breaks down, with tips for each:

Unacceptable Risk (The Danger Zone):

Some AI is too toxic, like systems that manipulate people or enable mass surveillance (e.g., dystopian tracking tools).

Action: Avoid it. The ethical and legal fallout outweighs any gain (think PR disasters or bans).

High Risk (Tread Carefully):

AI in sensitive spots—hiring, healthcare, or critical systems—needs extra care. These can shape lives or livelihoods.

Action: Pile on safeguards such as regular audits, human veto power, and transparent records. Evaluate these projects as if your company’s future depends on it (it might).

Limited Risk (Keep It Honest):

Systems that touch people directly, like chatbots or AI-generated ads, need clarity, not heavy oversight.

Action: Label it as AI so users know what’s up. Monitor for quirks, but don’t overcomplicate it.

Minimal Risk (Green Light, Mostly):

Low-stakes AI—like spam filters or movie suggestions—poses little harm.

Action: Use it freely, but keep an eye out. Even small glitches can annoy users or dent trust.
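If your team keeps an inventory of AI projects, the four tiers can live in it as a simple lookup. The sketch below is one hypothetical way to do that: the project names and their tier assignments are invented examples, not legal classifications, and the safeguard lists just paraphrase the actions above.

```python
from enum import Enum

class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"

# Safeguards paraphrased from the tier descriptions above.
SAFEGUARDS = {
    RiskTier.UNACCEPTABLE: ["do not build or deploy"],
    RiskTier.HIGH: ["regular audits", "human veto power", "transparent records"],
    RiskTier.LIMITED: ["label as AI", "monitor for quirks"],
    RiskTier.MINIMAL: ["light monitoring"],
}

# Hypothetical project register; tier assignments are examples only.
PROJECTS = {
    "resume screening": RiskTier.HIGH,
    "support chatbot": RiskTier.LIMITED,
    "spam filter": RiskTier.MINIMAL,
}

def review_checklist(project):
    """Return (tier, safeguards) for a project, defaulting to HIGH when
    nobody has classified it yet (a deliberately cautious assumption)."""
    tier = PROJECTS.get(project, RiskTier.HIGH)
    return tier, SAFEGUARDS[tier]

for name in ("resume screening", "support chatbot", "new pricing model"):
    tier, steps = review_checklist(name)
    print(f"{name}: {tier.value} -> {', '.join(steps)}")
```

Defaulting unclassified projects to the high tier is a design choice: it forces someone to argue a project down to a lighter tier rather than letting it slip through unreviewed.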

4. Tame the Beast: Keep AI in Check

Even with sharp experts and a risk map, AI can beat you. Here’s how to ride it smart:

Train everyone: Equip your team—not just techies—to spot AI weirdness (odd outputs, privacy red flags). Awareness saves headaches.

Explain it: Regulators and clients want to know how AI decides. Tools like SHAP make it readable. No explanation, no deployment.

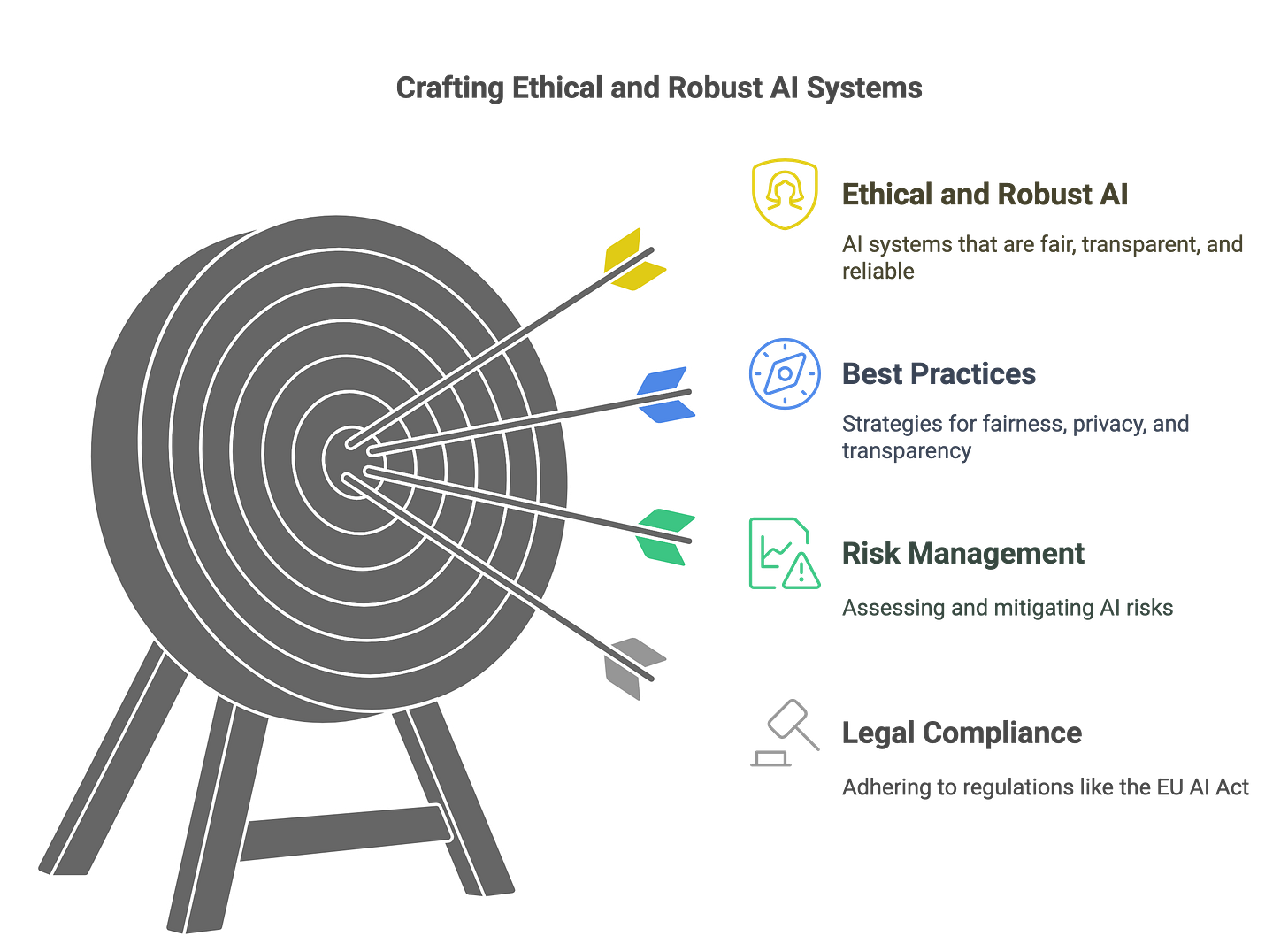

Note to AI Experts: Crafting Trustworthy Tech

As builders of AI’s future, raw skill isn’t enough. Your systems must be legal, ethical, and tough as nails.

Here’s how to craft AI that shines under scrutiny, using the EU AI Act as a guide—not a mandate—to highlight risks and best practices. These principles apply wherever you code, so let’s dive in.

1. Law Up: Navigate Rules with the EU AI Act as a Compass

Regulations are tightening, and the EU AI Act’s four risk levels are a smart way to gauge your responsibilities—even if your local laws differ. Here’s the scoop:

Unacceptable Risk (Off Limits):

AI that trashes rights—like manipulation tools or mass surveillance—is a no-go.

Action: Skip it. It’s a legal and ethical minefield, so don’t touch it.

High Risk (Heavy Duty):

AI in critical areas—healthcare, hiring, infrastructure—demands precision.

Action:

Test relentlessly: stress it, break it, fix it.

Log everything—data, tweaks, outcomes—for accountability.

Keep humans in charge—AI advises, people decide.

Use XAI (e.g., LIME) to decode decisions. If it’s a black box, it’s not ready.

Limited Risk (Stay Clear):

AI that interacts with users—like chatbots—needs transparency.

Action: Tag it as AI. Users hate being duped, so keep it real.

Minimal Risk (Low Pressure):

Everyday AI—like recommendation engines—gets a lighter touch.

Action: Don’t slack—monitor for bugs. Small flaws can still sting.

Laws evolve, but the EU AI Act’s logic is universal. Align with it, and you’re ahead of the compliance curve.
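For the high-risk tier, “log everything” and “keep humans in charge” can start as something very small. The sketch below is one possible pattern, not a compliance recipe: the model only fast-tracks cases it is very confident about, everything else goes to a person, and every routing decision is written to an audit log. The 0.95 threshold, the field names, and the in-memory list standing in for a log file are all assumptions.

```python
import json
from dataclasses import dataclass, asdict

@dataclass
class Decision:
    case_id: str
    model_score: float  # model's estimated probability of "approve"
    route: str          # "auto_approve" or "human_review"

def route_case(case_id, model_score, auto_threshold=0.95, audit_log=None):
    """Auto-approve only very confident cases; send the rest to a person.

    The model never rejects anyone on its own. Each decision is appended
    to `audit_log` as one JSON line so it can be reviewed later.
    """
    route = "auto_approve" if model_score >= auto_threshold else "human_review"
    decision = Decision(case_id, model_score, route)
    if audit_log is not None:
        audit_log.append(json.dumps(asdict(decision)))
    return decision

log = []  # stand-in for an append-only log file or table
confident = route_case("case-001", 0.98, audit_log=log)
uncertain = route_case("case-002", 0.61, audit_log=log)
print(confident.route, uncertain.route)
print("\n".join(log))
```

In a stricter setup you might drop the automatic path entirely and use the score only to prioritize the human review queue.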

2. Build Right: Best Practices for Trustworthy AI

Performance is table stakes—your AI needs to be fair, private, and open too. Here’s how:

Fairness: Bias hides in data and design. Fight it with diverse datasets, audits, and tools like TensorFlow’s Fairness Indicators. Check outputs: does the model favor one group? Fix it.

Privacy: Protect user data with federated learning (no central hoard) or differential privacy (mask identities). Less data, less risk.

Transparency: Use XAI techniques such as SHAP and feature importance to show how your model thinks. If a client asks “why,” you’d better have an answer.
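To show what “mask identities” can mean in practice, here is a minimal sketch of the Laplace mechanism, a textbook building block of differential privacy: answer a counting query, but add calibrated noise so no single person’s presence can be inferred from the result. The dataset, the query, and the epsilon value are assumptions, and Python’s `random` module is used only to keep the example self-contained. A production system would use a vetted differential-privacy library and a secure noise source.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon, rng):
    """Noisy count of items matching `predicate`.

    A counting query changes by at most 1 when one person is added or
    removed (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)

rng = random.Random(42)  # fixed seed only so the example is repeatable
ages = [23, 35, 41, 29, 52, 67, 38, 44]  # hypothetical records

noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of people aged 40+: {noisy:.2f}")
```

Smaller epsilon values mean more noise and stronger privacy; larger values mean more accurate answers and weaker privacy. Choosing that trade-off is a policy decision, not just an engineering one.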

3. Stress Test: Break It Before It Breaks You

AI flops can be fatal—think misdiagnoses or crashed drones. Catch cracks early:

Edge cases: Test the weird stuff—outliers, bad inputs, chaos. If it survives, it’s stronger.

Diverse testers: Different perspectives spot different flaws. Homogeneous teams miss big biases.

Real-world sims: Red team it—attack your own system. Adversarial testing finds holes you didn’t know existed.
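The edge-case item above can be sketched as a tiny harness: throw the weird inputs at your model wrapper and insist that it either returns a sane score or refuses cleanly. Everything here is hypothetical, including the `risk_score` function, its formula, and the list of edge cases; the point is the pattern, not the model.

```python
import math

def risk_score(age, income):
    """Hypothetical model wrapper: returns a score in [0, 1] or raises
    ValueError on input it cannot handle."""
    for name, value in (("age", age), ("income", income)):
        if not isinstance(value, (int, float)) or isinstance(value, bool):
            raise ValueError(f"{name} must be a number")
        if math.isnan(value) or math.isinf(value):
            raise ValueError(f"{name} must be finite")
    if age < 0 or income < 0:
        raise ValueError("age and income must be non-negative")
    raw = 0.5 + 0.004 * (age - 40) - 0.000002 * (income - 50_000)
    return min(1.0, max(0.0, raw))  # clamp into the valid range

EDGE_CASES = [
    (0, 0), (120, 10**12), (40, float("nan")), (float("inf"), 50_000),
    (-5, 50_000), ("forty", 50_000), (None, None),
]

def run_edge_cases(model, cases):
    """Return the cases where the model neither produced a bounded score
    nor failed cleanly with ValueError."""
    failures = []
    for case in cases:
        try:
            score = model(*case)
        except ValueError:
            continue  # a clean, documented rejection counts as a pass
        except Exception as exc:  # anything else is an unplanned crash
            failures.append((case, repr(exc)))
            continue
        if not (isinstance(score, float) and 0.0 <= score <= 1.0):
            failures.append((case, f"out-of-range score {score!r}"))
    return failures

failures = run_edge_cases(risk_score, EDGE_CASES)
print("failures:", failures)
```

Point the same harness at a careless wrapper, say one that divides by income without checking for zero, and the crash shows up in the failure list instead of in production.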

Your code is the bedrock—make it solid, make it just. Build AI that skeptics cannot crack, and you’ve mastered the craft.

Conclusion

AI is a double-edged sword—capable of slicing through inefficiencies to unlock unprecedented progress, yet sharp enough to cut us if we’re not careful. It can revolutionize work by automating the mundane and amplifying human creativity, supercharge learning with personalized tools, and enhance life with smarter solutions. But it’s not without pitfalls: bias can creep into decision-making, opacity can erode trust, and privacy breaches can shatter confidence. Stumble on these, and we risk more than just headlines—we risk losing control of the very systems we’ve built.

To the skeptics: take a breath. AI isn’t some rogue force barreling toward a dystopian future—it’s a reflection of us, shaped by our choices, values, and vigilance. The fears you hold—job losses, biased algorithms, surveillance creep—are real, but they’re not inevitable. They are challenges we can meet with smart policies, ethical guardrails, and a commitment to transparency. Understanding AI’s risks doesn’t mean rejecting it; it means wielding it wisely.

To the enthusiasts: this is your moment. You’re not just building code—you’re building trust. Every line you write, every model you train shapes the future. Embrace that responsibility. Prioritize fairness, bake in transparency, and design with privacy at the core. The skeptics’ concerns aren’t roadblocks; they’re your blueprint for better AI. Rise to the challenge, and you’ll turn doubt into confidence.

For everyone else—users, leaders, learners—stay curious and critical. AI’s potential is vast, but its impact depends on how we engage with it. Ask questions, demand clarity, and don’t settle for “it’s just the algorithm.” We’re not helpless passengers on this ride; we’re the drivers.

We’re not playing with fire—we’re forging a legacy. Let’s make it one we’re proud of.
