Building GenAI Apps #1: Unlock OCR and Table Data from Images, PDFs, and Webpages with Tesseract, Pandas and Unstructured

No More Manual Data Entry: Extract Text & Tables Like a Pro with Python

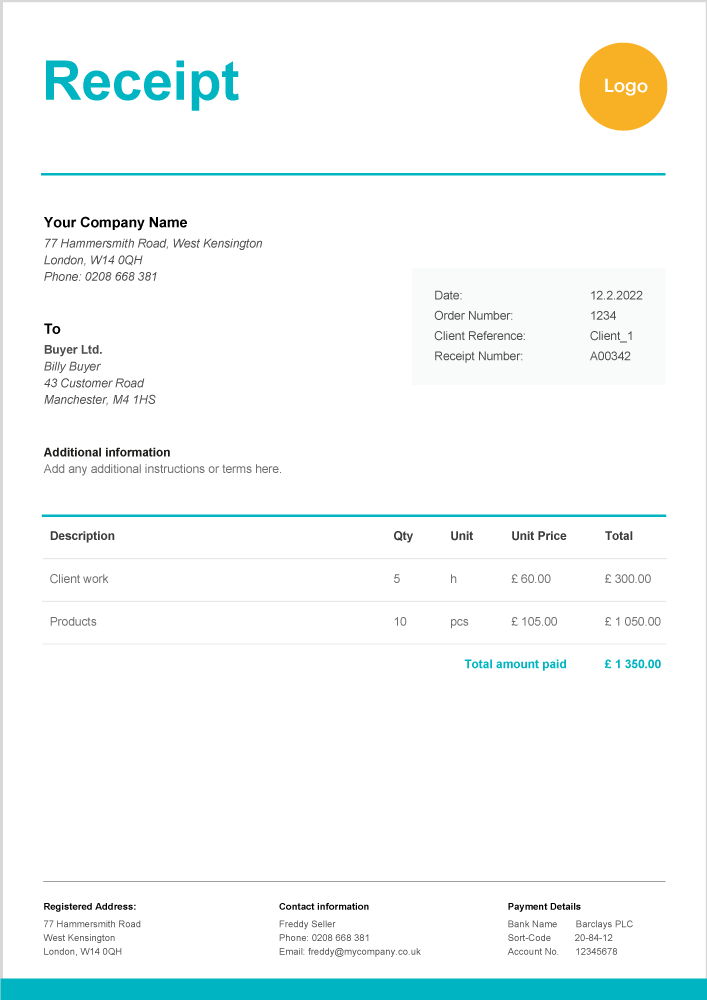

Nene is a small catering business owner, drowning in a sea of vendor invoices and receipts. Some are blurry photos snapped on her phone, others are PDFs clogging her inbox, and a few are screenshots from vendor websites. She wants to analyze her expenses to optimize her budget, but manually typing data from these documents is not an option. It’s a nightmare—time-consuming and prone to errors. One wrong number could skew her financial planning.

Sound like a challenge you’ve faced?

Whether you’re a business owner like Nene or a developer trying to automate tedious tasks, this tutorial is your ticket to freedom. We’ll use Optical Character Recognition (OCR) and table extraction to pull text and structured data from images, PDFs, and webpages—effortlessly. By the end, you’ll have a script that could save Nene hours and unlock powerful data for your own projects.

Why This Matters

OCR lets your computer “read” text from images, like a digital librarian. Table extraction, on the other hand, pulls structured data (such as invoice line items) from documents. Together, they’re a superpower for automating data entry, analyzing expenses, or building apps that process real-world documents.

Here’s what we’ll cover:

Extracting text from images using Tesseract via pytesseract.

Pulling tables and text from PDFs with Unstructured.

Scraping tables from webpages with pandas.

Combining everything into a versatile script.

An advanced step: Parsing invoices and receipts with Hugging Face’s transformers using the unstructuredio/donut-invoices and unstructuredio/donut-base-sroie models.

A challenge to turn it into an API with FastAPI.

Ready? Let’s get started!

What You’ll Need

Before we begin, ensure you have:

Python 3.8+ installed (download from python.org).

A terminal or command line to run commands.

A code editor (VSCode, PyCharm, or any text editor works).

We’ll install Tesseract, Poppler, and Python libraries—I’ll guide you every step of the way. No prior experience required!

Step 1: Installing the Tools

Let’s set up our toolkit. Open your terminal and run:

pip install "unstructured[all-docs]" pytesseract pandas transformers

Here’s what each package does:

unstructured[all-docs]: A Python library for extracting structured data (text, tables) from PDFs, images, and more. The all-docs extra includes dependencies like pdfminer.six for PDF processing. Learn more at unstructured.io.

pytesseract: A Python wrapper for Tesseract, the open-source OCR engine for text extraction from images. Check out its GitHub page.

pandas: A data analysis library for handling tables and saving them as CSV. See pandas.pydata.org.

transformers: Hugging Face’s library for loading pre-trained models like unstructuredio/donut-invoices and unstructuredio/donut-base-sroie (used in the advanced step). Visit huggingface.co.

Install Tesseract and Poppler (for PDF-to-image conversion):

Ubuntu/Debian (or Colab/Linux environments):

sudo apt-get install tesseract-ocr poppler-utils

macOS:

brew install tesseract poppler

Windows:

Download and install Tesseract from this GitHub page and add it to your PATH.

Install Poppler via this guide or use conda install poppler.

For Google Colab, run:

!apt-get install tesseract-ocr poppler-utils

!pip install "unstructured[all-docs]" pytesseract pandas transformers

If you get stuck, refer to the Tesseract docs and the Poppler installation guide.
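Before moving on, it can help to confirm that everything is actually in place. The sketch below is one way to do that using only the standard library. The helper name check_setup is made up for this tutorial, and it assumes that Poppler exposes the pdftoppm command and that the packages import under the names unstructured, pytesseract, pandas, and transformers.

```python
import importlib.util
import shutil


def check_setup():
    """Report which command-line tools and Python packages can be found.

    Hypothetical helper for this tutorial, not part of any library.
    """
    binaries = ["tesseract", "pdftoppm"]  # pdftoppm ships with Poppler
    modules = ["unstructured", "pytesseract", "pandas", "transformers"]

    report = {}
    for name in binaries:
        # shutil.which returns the full path, or None if it is not on PATH
        report[name] = shutil.which(name) is not None
    for name in modules:
        # find_spec returns None when a top-level package is not installed
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for name, found in check_setup().items():
        print(f"{name}: {'OK' if found else 'MISSING'}")
```

Anything reported as MISSING points back to the matching install command above.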

Step 2: Setting Up Your Workspace [OPTIONAL]

If you would like to isolate this experiment from your system Python, create a virtual environment and activate it:

python -m venv ocr-env

source ocr-env/bin/activate

On Windows, activate with ocr-env\Scripts\activate instead. You’re now in the ocr-env environment; rerun the pip install command from Step 1 here so the packages are installed inside it.
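If you are unsure whether the environment is really active, a quick check from Python can settle it. This is a small sketch using the standard library only; the function name in_virtualenv is just an illustrative choice.

```python
import sys


def in_virtualenv():
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment while
    # sys.base_prefix still points at the base Python installation.
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    print("Inside a virtual environment:", in_virtualenv())
    print("Interpreter:", sys.executable)
```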

Step 3: Extracting Text from Images with Tesseract

Let’s start with Nene’s blurry receipt photo. We’ll use Tesseract via pytesseract to extract text from an image.

Try It: Read an Invoice/Receipt Image

Download a sample invoice image from Google Search results and save it in your project folder as invoice_sample.png.

Here’s the code:

from PIL import Image
import pytesseract

# Load the image
image = Image.open("invoice_sample.png")

# Extract text with Tesseract
text = pytesseract.image_to_string(image, lang="eng")

# Show the first 50 characters
print("Extracted Text:", text[:50])

What’s Happening?

PIL.Image.open() loads the image.

pytesseract.image_to_string() runs Tesseract’s OCR to extract text.

lang="eng" specifies English (see supported languages).

Tip: For better accuracy, preprocess the image:

image = image.convert("L")  # Converts color images to grayscale

If you see a “Tesseract not found” error, ensure tesseract-ocr is installed and on your PATH.
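For blurry phone photos like Nene’s, the grayscale tip can be taken a little further. The sketch below chains a few common Pillow steps: grayscale, contrast stretch, upscaling, and a simple black-and-white threshold. The function name preprocess_for_ocr and the default scale and threshold values are assumptions for illustration, not tuned settings, so expect to adjust them for your own images.

```python
from PIL import Image, ImageOps


def preprocess_for_ocr(image, scale=2, threshold=150):
    """Return a grayscale, upscaled, black-and-white copy of the image.

    scale and threshold are illustrative defaults, not tuned values.
    """
    gray = image.convert("L")           # grayscale, as in the tip above
    gray = ImageOps.autocontrast(gray)  # stretch the contrast range
    width, height = gray.size
    gray = gray.resize((width * scale, height * scale), Image.LANCZOS)
    # Map every pixel to pure black or pure white
    return gray.point(lambda p: 255 if p > threshold else 0)


if __name__ == "__main__":
    # A blank stand-in image so the sketch runs without a file on disk
    sample = Image.new("RGB", (100, 40), "white")
    cleaned = preprocess_for_ocr(sample)
    print(cleaned.mode, cleaned.size)
```

The result can be passed to pytesseract.image_to_string() in place of the original image.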

Step 4: Extracting Tables from PDFs with Unstructured

Now, let’s tackle a PDF invoice. PDFs can be text-based or scanned images, and Unstructured handles both using its hi_res strategy, which leverages Tesseract for OCR.

Try It: Parse a PDF Invoice

Download a sample PDF invoice from a Google Search result (e.g., sample_invoice.pdf) and save it in your project folder.

Here’s the code:

from unstructured.partition.pdf import partition_pdf
from unstructured.documents.elements import Table

# Process the PDF
elements = partition_pdf(filename="sample_invoice.pdf", strategy="hi_res")

# Extract text and tables
for element in elements:
    if isinstance(element, Table):
        print("Table Found:", element.text)
    elif hasattr(element, "text"):
        print("Text:", element.text[:200])

What’s Happening?

partition_pdf() splits the PDF into elements (text, tables, etc.).

strategy="hi_res" uses Tesseract for OCR on image-based PDFs and enhances table detection.

We print tables and text separately.

The unstructured[all-docs] extra installs pdfminer.six and pdf2image, which partition_pdf() relies on to read text-based PDFs and to render scanned pages as images. Learn more at unstructured.io/docs.
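A table’s element.text is a flat string, which is awkward for line items. When partition_pdf() is called with infer_table_structure=True, Unstructured can also attach an HTML version of the table at element.metadata.text_as_html. The sketch below turns that HTML into rows using only the standard library. The names TableRows and table_rows are invented for this example, and the demo uses a SimpleNamespace stand-in rather than a real Unstructured element so that it runs without a PDF.

```python
from html.parser import HTMLParser
from types import SimpleNamespace


class TableRows(HTMLParser):
    """Collect the cell text of an HTML table as a list of rows."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self.rows.append([])
        elif tag in ("td", "th") and self.rows:
            self.rows[-1].append("")
            self._in_cell = True

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.rows[-1][-1] += data.strip()


def table_rows(elements):
    """Return one list of rows per element that carries table HTML."""
    result = []
    for el in elements:
        html = getattr(el.metadata, "text_as_html", None)
        if html:
            parser = TableRows()
            parser.feed(html)
            result.append(parser.rows)
    return result


if __name__ == "__main__":
    # Stand-in for a Table element; real ones come from partition_pdf(...)
    fake = SimpleNamespace(metadata=SimpleNamespace(
        text_as_html="<table><tr><th>Item</th><th>Price</th></tr>"
                     "<tr><td>Flour</td><td>12.50</td></tr></table>"))
    print(table_rows([fake]))
```

Each table comes back as a list of rows, which is easy to hand to pandas.DataFrame() or write out as CSV.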

Step 5: Parsing Receipts with Unstructured

Receipts are messy—small fonts, crumpled paper, odd layouts. Unstructured uses Tesseract and advanced parsing to extract structured data like items and totals.

Try It: Extract Data from a Receipt Image

Code:

from unstructured.partition.image import partition_image

# Process the receipt image
elements = partition_image(filename="receipt_sample.jpg")

# Print extracted data
for element in elements:
    print("Receipt Data:", element.text)

What’s Happening?

partition_image() uses Tesseract for OCR and Unstructured’s parsing to split the receipt into elements.

Each element’s text holds a piece of the receipt, such as an item line, a price, or the total.
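The extracted text is still just text, so pulling out a specific field takes one more step. As a minimal sketch, the snippet below looks for a total line with a regular expression. It assumes the receipt prints the amount on a line that starts with the word "total", which will not hold for every vendor; find_total and the sample strings are hypothetical.

```python
import re

# Matches lines such as "TOTAL 23.40" or "Total: $1,234.56" (assumed layout)
TOTAL_PATTERN = re.compile(
    r"^\s*total\b[^\d\n]*([\d,]+\.\d{2})\s*$",
    re.IGNORECASE | re.MULTILINE,
)


def find_total(ocr_text):
    """Return the receipt total as a float, or None if no line matches."""
    match = TOTAL_PATTERN.search(ocr_text)
    if match is None:
        return None
    return float(match.group(1).replace(",", ""))


if __name__ == "__main__":
    # Made-up OCR output, standing in for the joined element.text values
    sample = "Flour 12.50\nSugar 8.90\nSubtotal 21.40\nTOTAL: $23.40\n"
    print("Total:", find_total(sample))
```

Because the pattern is anchored to the start of a line, a "Subtotal" line is not mistaken for the total.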

Step 6: Scraping Webpage Tables with Pandas

What if Nene’s vendor posts prices online? We’ll use pandas to extract tables from webpages—no complex scraping required.

Try It: Extract a Web Table

Use a Wikipedia page with a table: List of Countries by Population.

import pandas as pd

# Scrape tables from the webpage
tables = pd.read_html("https://en.wikipedia.org/wiki/List_of_countries_by_population_(United_Nations)")

# Print the first table’s top rows
print("Web Table:", tables[0].head())

What’s Happening?

pd.read_html() grabs all <table> elements and converts them to DataFrames.

We print the first table’s first five rows using head().

For trickier websites, consider dedicated scraping libraries such as BeautifulSoup or Scrapy; pandas is great for simple cases.
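Scraped tables usually need a little cleanup before they are useful for budgeting, because prices tend to arrive as strings. Here is a small sketch of that step, ending with the CSV export mentioned in Step 1. The table contents are invented for illustration and stand in for one of the DataFrames that pd.read_html() returns.

```python
import io

import pandas as pd

# Hypothetical vendor price table, standing in for one entry of pd.read_html(...)
table = pd.DataFrame({
    "Item": ["Flour (25 kg)", "Sugar (10 kg)", "Catering oven"],
    "Price": ["$12.50", "$8.90", "$1,015.00"],
})

# Strip currency symbols and thousands separators, then convert to numbers
table["Price"] = (
    table["Price"]
    .str.replace(r"[$,]", "", regex=True)
    .astype(float)
)

# Write CSV to an in-memory buffer; pass a file path instead to save to disk
buffer = io.StringIO()
table.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)
```

With the Price column numeric, calls like table["Price"].sum() work as expected.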

Step 7: Tying It All Together

Let’s combine Tesseract, Unstructured, and pandas into a single script that handles images, PDFs, or webpages—Nene’s dream tool for her catering business.

extract_data.py:

from PIL import Image
import pytesseract
from unstructured.partition.pdf import partition_pdf
from unstructured.documents.elements import Table
import pandas as pd


def extract_data(source, source_type):
    """Extract text and tables from images, PDFs, or webpages."""
    if source_type == "image":
        image = Image.open(source).convert("L")  # Grayscale for better OCR
        text = pytesseract.image_to_string(image, lang="eng")
        return {"text": text, "tables": []}
    elif source_type == "pdf":
        elements = partition_pdf(filename=source, strategy="hi_res")
        text = " ".join([el.text for el in elements if hasattr(el, "text")])
        tables = [el.text for el in elements if isinstance(el, Table)]
        return {"text": text, "tables": tables}
    elif source_type == "web":
        tables = pd.read_html(source)
        return {"text": "", "tables": [table.to_dict() for table in tables]}
    else:
        raise ValueError("Unknown source type")


# Test the script
if __name__ == "__main__":
    # Image example
    img_result = extract_data("receipt_sample.jpg", "image")
    print("Image Result:", img_result)

    # PDF example
    pdf_result = extract_data("sample_invoice.pdf", "pdf")
    print("PDF Result:", pdf_result)

    # Web example
    web_result = extract_data("https://en.wikipedia.org/wiki/List_of_countries_by_population_(United_Nations)", "web")
    print("Web Result:", web_result)

How It Works:

The extract_data() function checks the input type (image, pdf, or web).

For images, it uses Tesseract with grayscale preprocessing.

For PDFs, it uses Unstructured’s hi_res strategy.

For webpages, it uses pandas for table extraction.

The output is a dictionary with text (raw text) and tables (structured data).
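Passing the source type by hand gets tedious once there are many files. One possible convenience, sketched below, is to infer it from the URL scheme or the file extension. The helper guess_source_type and the list of image extensions are assumptions made for this example and are not part of the script above.

```python
from pathlib import Path
from urllib.parse import urlparse

# Extensions treated as images here; extend the set to suit your files
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".tif", ".tiff", ".bmp"}


def guess_source_type(source):
    """Map a path or URL to "image", "pdf", or "web"."""
    if urlparse(source).scheme in ("http", "https"):
        return "web"
    suffix = Path(source).suffix.lower()
    if suffix == ".pdf":
        return "pdf"
    if suffix in IMAGE_SUFFIXES:
        return "image"
    raise ValueError(f"Cannot infer source type for {source!r}")


if __name__ == "__main__":
    for source in ["receipt_sample.jpg", "sample_invoice.pdf", "https://example.com/prices"]:
        print(source, "->", guess_source_type(source))
```

The result can be fed straight into the function, as in extract_data(source, guess_source_type(source)).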

Save as extract_data.py and run:

python extract_data.py

Step 8: Advanced Parsing with Hugging Face Models

For advanced users, let’s level up with Hugging Face’s transformers library, using the unstructuredio/donut-invoices and unstructuredio/donut-base-sroie models. These vision-based transformers are fine-tuned to extract structured data (e.g., vendor names, totals, line items) from invoices and receipts, which can be more precise than raw OCR.

Here’s a separate script to demonstrate both:

from transformers import pipeline
from PIL import Image


def parse_with_huggingface(source, model_name):
    """Extract structured data from images using Hugging Face models."""
    # Load the model pipeline
    parser = pipeline("image-to-text", model=model_name)

    # Load the image as RGB, the 3-channel input Donut models expect
    image = Image.open(source).convert("RGB")

    # Run inference to extract structured data
    result = parser(image)
    return {"model": model_name, "data": result}


# Test the script
if __name__ == "__main__":
    # Invoice parsing with unstructuredio/donut-invoices
    invoice_result = parse_with_huggingface("/content/sample-reciept.png", "unstructuredio/donut-invoices")
    print("Invoice Data:", invoice_result)

    # Receipt parsing with unstructuredio/donut-base-sroie
    receipt_result = parse_with_huggingface("/content/sample-reciept.png", "unstructuredio/donut-base-sroie")
    print("Receipt Data:", receipt_result)

Bonus Exercise: Build a FastAPI App

To take this tutorial a step further, I encourage you to turn the extract_data.py script into a FastAPI application. Imagine Nene uploading a receipt or sending a webpage URL, and your API returns extracted data as JSON.

Wrapping Up

You’ve built a tool that transforms Nene’s chaotic invoices, receipts, and webpages into clean, usable data. With Tesseract, Unstructured, and pandas, you’ve mastered basic OCR and table extraction. The advanced Hugging Face models (donut-invoices and donut-base-sroie) take it further, offering precise parsing for invoices and receipts.

For Nene, this means more time growing her catering business. For you, it’s a foundation for GenAI apps, from expense trackers to data pipelines.

Developers: Tweak these scripts, add error handling, or integrate with databases.

Business folks: Imagine the time and cost savings from automating data entry—more focus on strategy, less on spreadsheets.

What’s next? Try the FastAPI challenge, experiment with your own files, or join me for the next Building GenAI Apps tutorial, where we’ll explore more AI concepts.

Subscribe and let’s keep building!
