An Easy Way to Start with AI: Write Your First AI Script with Hugging Face

Did you know that Hugging Face hosts over 1 million models, datasets, and applications? Often dubbed the "GitHub for AI," this platform has revolutionized how people—beginners and experts alike—build and share AI projects.

In this guide, you’ll discover how to write your first AI script using Hugging Face, even if you’ve never coded before. By the end, you’ll have a solid grasp of machine learning basics, know your way around Hugging Face’s powerful tools, and have created your own AI projects through hands-on tutorials. AI might sound intimidating, but Hugging Face makes it approachable and fun. Let’s dive in!

What You'll Learn

Here’s what this article covers:

Hugging Face Basics: How this platform simplifies AI development for everyone.

Machine Learning Pipeline: The essential steps to build an AI model, explained simply.

Hugging Face Features: A deep dive into models, datasets, tokenizers, and more.

Hands-On Tutorials: Step-by-step guides to write scripts, explore data, and deploy projects.

Next Steps: Options to advance your skills, from using APIs to fine-tuning models.

No prior experience is required, just curiosity and a willingness to experiment (though basic Python programming skills are an advantage)!

Why It's Important: The Democratization of AI

AI isn’t just for PhDs or tech giants anymore. Platforms like Hugging Face are democratizing AI, making it possible for anyone to create intelligent applications. Whether you’re a student, hobbyist, or professional, you can now solve real-world problems—think chatbots, image recognition, or text analysis—without years of training or expensive hardware.

Hugging Face accelerates this by providing pre-built models, datasets, and tools, leveling the playing field and sparking innovation worldwide.

Understanding the Machine Learning Pipeline

To see why Hugging Face is so useful, let’s first look at the typical pipeline for developing an AI model from scratch.

Think of this machine learning (ML) pipeline, the process of building an AI model, as baking a cake:

Data Collection (Gathering Ingredients)

You need quality ingredients, like flour or sugar, to bake a cake. In AI, models need data (text, images, etc.) to learn from. Collecting data can be daunting, but Hugging Face provides thousands of ready-made datasets, such as movie reviews or social media posts.

Data Preprocessing (Mixing the Batter)

Before baking, you mix and prepare ingredients. Similarly, data must be cleaned (e.g., removing typos) and formatted (e.g., turning text into numbers) so models can process it. This step ensures everything is consistent and usable.

Model Selection (Choosing a Recipe)

Just as you pick a cake recipe, you select a model suited to your task, such as text generation or image classification. Models are the "brains" of AI, and Hugging Face offers pretrained ones to save you time.

Training (Baking the Cake)

Training is where the magic happens: you feed data into the model, and it learns patterns, such as how to spot positive reviews. This requires computing power and time, but starting from a pretrained model lets you skip much of this effort.

Evaluation (Tasting the Cake)

After baking, you taste-test. In AI, you check the model’s accuracy on new data that it did not see during training. If it struggles (e.g., mislabels reviews), you tweak it or gather more data.

Deployment (Serving the Cake)

Finally, you share your cake. In AI terms, you deploy your model into an app that others can use, such as a website or chatbot. Hugging Face Spaces lets you share AI applications through simple graphical interfaces that anyone can try.

Hugging Face simplifies this pipeline by offering pretrained models and tools that handle complex steps, letting you focus on creativity rather than technical hurdles.
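A toy sketch can make the preprocessing, training, and evaluation steps above concrete. It uses plain Python only, with no Hugging Face libraries. The six training sentences are made up, and the "model" is a tiny bag-of-words logistic regression. It stands in for the large neural networks you would normally download. This illustrates the shape of the pipeline, not how production models are built.

```python
import math
import re

# Made-up toy data for illustration: (text, label), 1 = positive, 0 = negative
train_data = [
    ("I loved this movie", 1),
    ("What a wonderful film", 1),
    ("Great acting and a great story", 1),
    ("I hated this movie", 0),
    ("What a boring film", 0),
    ("Terrible acting and a dull story", 0),
]
test_data = [("A wonderful story", 1), ("A boring and terrible movie", 0)]


def clean(text):
    """Preprocessing: lowercase, keep only letters and spaces, split into words."""
    return re.sub(r"[^a-z ]", "", text.lower()).split()


def build_vocab(dataset):
    """Give every distinct training word an integer id."""
    vocab = {}
    for text, _ in dataset:
        for word in clean(text):
            vocab.setdefault(word, len(vocab))
    return vocab


def vectorize(text, vocab):
    """Turn text into numbers: a count per vocabulary word (unknown words are ignored)."""
    vec = [0.0] * len(vocab)
    for word in clean(text):
        if word in vocab:
            vec[vocab[word]] += 1.0
    return vec


def predict_proba(weights, bias, vec):
    """Model: probability that the text is positive (logistic regression)."""
    z = bias + sum(w * x for w, x in zip(weights, vec))
    return 1.0 / (1.0 + math.exp(-z))


def train(dataset, vocab, epochs=200, lr=0.5):
    """Training: nudge the weights after every example (stochastic gradient descent)."""
    weights, bias = [0.0] * len(vocab), 0.0
    for _ in range(epochs):
        for text, label in dataset:
            vec = vectorize(text, vocab)
            error = predict_proba(weights, bias, vec) - label
            weights = [w - lr * error * x for w, x in zip(weights, vec)]
            bias -= lr * error
    return weights, bias


def accuracy(dataset, vocab, weights, bias):
    """Evaluation: share of examples whose predicted label matches the true one."""
    hits = 0
    for text, label in dataset:
        predicted = 1 if predict_proba(weights, bias, vectorize(text, vocab)) >= 0.5 else 0
        hits += int(predicted == label)
    return hits / len(dataset)


vocab = build_vocab(train_data)
weights, bias = train(train_data, vocab)
print("train accuracy:", accuracy(train_data, vocab, weights, bias))
print("test accuracy:", accuracy(test_data, vocab, weights, bias))
```

Real projects swap each toy piece for a serious one: a Hugging Face dataset for the hand-written list, a tokenizer for `clean()` and `vectorize()`, and a pretrained model for the logistic regression.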

AI’s GitHub - How Hugging Face is Structured

Hugging Face earns its nickname "GitHub for AI" by being a hub where people share, discover, and collaborate on AI resources. Here’s how it streamlines the process:

Pretrained Models: Why bake from scratch when you can use a ready-made cake? Hugging Face provides models trained on massive datasets, saving you time and effort.

Datasets Galore: From tweets to scientific papers, its vast dataset library means you don’t have to hunt for data.

Collaboration: Like GitHub, it’s a community space—explore others’ projects, contribute, or get inspired.

Simplified Tools: Features like tokenizers and APIs handle tricky tasks, making AI development approachable.

For example, instead of spending weeks training a translation model, you can grab one from Hugging Face and start translating text in minutes. It’s fast, collaborative, and beginner-friendly.

Exploring Key Features

Hugging Face is packed with tools to make AI accessible. Here’s a closer look:

Models: A library of pretrained models for tasks like:

Text Classification: E.g., detecting spam emails.

Translation: E.g., English to French.

Image Tasks: E.g., identifying objects in photos.

Popular options include BERT (great for understanding text) and Stable Diffusion (for generating images).

Datasets: Thousands of collections, like the IMDB dataset (movie reviews) or Common Voice (speech data), ready for your projects.

Tokenizers: These split text into manageable chunks (tokens) for models. For instance, "I love AI" becomes ["I", "love", "AI"], with numbers assigned for processing.

Spaces: Build and share interactive apps—like a sentiment analyzer—without server management.

APIs: Run models on Hugging Face’s GPU-backed cloud servers instead of your own hardware. This is perfect for quick tests or live apps.

Transformers Library: A Python toolkit with:

AutoClasses: Automatically load models and tokenizers.

Metrics: Evaluate performance easily. Ready-made metrics now live mainly in the companion evaluate library.

Trainer: Simplify training custom models.

Imagine having a Swiss Army knife for AI—that’s Hugging Face in a nutshell.
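A toy word-level tokenizer in plain Python makes the tokenizer idea concrete. The seven-entry vocabulary is hypothetical. Real Hugging Face tokenizers learn vocabularies with tens of thousands of entries, and they usually split rare words into subword pieces. This sketch lowercases its input, like "uncased" models do. It only shows the core idea of text → tokens → integer ids. It also shows the fixed-length padding and truncation that Tutorial 3 requests with `padding="max_length"` and `truncation=True`.

```python
# Hypothetical toy vocabulary; real tokenizers ship with much larger, learned vocabularies
VOCAB = {"[PAD]": 0, "[UNK]": 1, "i": 2, "love": 3, "ai": 4, "hugging": 5, "face": 6}
ID_TO_TOKEN = {idx: tok for tok, idx in VOCAB.items()}


def tokenize(text):
    """Split text into lowercase word tokens (real tokenizers may also split words into subwords)."""
    return text.lower().split()


def encode(text, max_length=6):
    """Map tokens to ids (unknown words -> [UNK]), then truncate or pad to max_length."""
    ids = [VOCAB.get(tok, VOCAB["[UNK]"]) for tok in tokenize(text)]
    ids = ids[:max_length]
    return ids + [VOCAB["[PAD]"]] * (max_length - len(ids))


def decode(ids):
    """Map ids back to tokens, dropping padding."""
    return [ID_TO_TOKEN[i] for i in ids if i != VOCAB["[PAD]"]]


print(tokenize("I love AI"))          # ['i', 'love', 'ai']
print(encode("I love AI"))            # [2, 3, 4, 0, 0, 0]
print(encode("I love pizza"))         # [2, 3, 1, 0, 0, 0]
print(decode(encode("I love AI")))    # ['i', 'love', 'ai']
```

Padding every input to the same length lets a model process many texts together as one batch. The `[UNK]` id keeps unseen words from crashing the lookup.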

Hands-On Tutorials

Let’s get coding! These tutorials work in any environment you prefer: your favorite Python text editor, IDE, or notebook setup. You’ll need Python installed (of course) and an internet connection to download libraries, models, and datasets.

Tutorial 1: Sentiment Analysis with a Pretrained Model

Let’s analyze whether text is positive or negative.

Set Up:

Install the Transformers library, plus PyTorch, which it uses to run the models:

```bash
pip install transformers torch
```

Load the Model:

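In a Python file or notebook, write:

```python
from transformers import pipeline

# No model is named here, so Transformers picks a default sentiment model and downloads it on first use
sentiment_analyzer = pipeline("sentiment-analysis")
```

Test It: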

Analyze some sentences:

```python
texts = ["This is amazing!", "I’m so disappointed."]
results = sentiment_analyzer(texts)  # one {"label": ..., "score": ...} dict per text

for text, result in zip(texts, results):
    print(f"Text: {text} | Label: {result['label']} | Confidence: {result['score']:.3f}")
```

Output:

You’ll see something like:

```
Text: This is amazing! | Label: POSITIVE | Confidence: 0.999
Text: I’m so disappointed. | Label: NEGATIVE | Confidence: 0.997
```

This took just a few lines—Hugging Face’s pretrained models make it that simple!
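As the loop above shows, the pipeline returns one dictionary per input text, with a `label` and a `score` key. A natural next step is to flag low-confidence predictions so a human can double-check them. The sketch below assumes a hypothetical helper named `split_by_confidence`. Its `results` list is made up and only mimics the pipeline's output shape. The 0.9 cutoff is an arbitrary choice for illustration.

```python
def split_by_confidence(texts, results, threshold=0.9):
    """Separate confident predictions from ones worth a second look."""
    confident, uncertain = [], []
    for text, result in zip(texts, results):
        bucket = confident if result["score"] >= threshold else uncertain
        bucket.append((text, result["label"], result["score"]))
    return confident, uncertain


# Hypothetical output in the same shape as sentiment_analyzer(texts)
texts = ["This is amazing!", "It was fine, I guess."]
results = [{"label": "POSITIVE", "score": 0.999}, {"label": "POSITIVE", "score": 0.62}]

confident, uncertain = split_by_confidence(texts, results)
print("Confident:", confident)
print("Needs review:", uncertain)
```

With the real model, you would pass `texts` and `sentiment_analyzer(texts)` instead of the hand-written lists.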

Tutorial 2: Exploring a Dataset

Let’s load the IMDB dataset to see movie reviews.

Install the Library:

```bash
pip install datasets
```

Load the Data:

```python
from datasets import load_dataset

imdb = load_dataset("imdb")  # downloaded on the first run, then cached locally
```

Check a Sample:

```python
sample = imdb["train"][0]
print("Review:", sample["text"])
print("Label:", "Positive" if sample["label"] == 1 else "Negative")  # 1 = positive, 0 = negative
```

Output:

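You will get output like this (the review is shortened here; the training split happens to start with negative reviews):

```
Review: I rented I AM CURIOUS-YELLOW from my video store because of all the controversy that surrounded it...
Label: Negative
```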

This dataset is great for experimenting or training models.
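Before training on a dataset, it is worth checking how its labels are distributed. If one class dominates, accuracy alone can look deceptively good. The sketch below uses Python's `collections.Counter` and a hypothetical helper named `label_distribution`. The rows are made up and only mimic the IMDB format: a `text` field and a `label` field where 1 means positive. With the real dataset you could pass the label column to the same function, e.g. `imdb["train"]["label"]`.

```python
from collections import Counter


def label_distribution(labels, names=("Negative", "Positive")):
    """Count how many examples carry each label id and map the ids to readable names."""
    counts = Counter(labels)
    return {names[label_id]: counts[label_id] for label_id in sorted(counts)}


# Hypothetical rows in the same shape as IMDB examples
rows = [
    {"text": "A wonderful film...", "label": 1},
    {"text": "Two hours I will never get back.", "label": 0},
    {"text": "Great cast, great script.", "label": 1},
]
print(label_distribution(row["label"] for row in rows))  # {'Negative': 1, 'Positive': 2}
```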

Tutorial 3: Training a Model

Let’s fine-tune a model on IMDB reviews.

Install Dependencies:

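Make sure transformers and datasets are installed. The Trainer also needs PyTorch and the accelerate package, and the transformers[torch] extra installs both:

```bash
pip install "transformers[torch]" datasets
```

Preprocess Data: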

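```python
from datasets import load_dataset
from transformers import AutoTokenizer

imdb = load_dataset("imdb")  # same dataset as in Tutorial 2, so this script also runs on its own
tokenizer = AutoTokenizer.from_pretrained("distilbert-base-uncased")

def preprocess(examples):
    # Turn each review into token ids, padded or truncated to exactly 128 tokens
    return tokenizer(examples["text"], padding="max_length", truncation=True, max_length=128)

imdb_encoded = imdb.map(preprocess, batched=True)
```

Set Up Training: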

```python
from transformers import AutoModelForSequenceClassification, Trainer, TrainingArguments

# DistilBERT with a new two-class head (0 = negative, 1 = positive)
model = AutoModelForSequenceClassification.from_pretrained("distilbert-base-uncased", num_labels=2)
args = TrainingArguments(output_dir="./model", per_device_train_batch_size=8, num_train_epochs=1)
# Tip: on a CPU, imdb_encoded["train"].shuffle(seed=42).select(range(2000)) trains much faster
trainer = Trainer(model=model, args=args, train_dataset=imdb_encoded["train"])
trainer.train()
```

Test It:

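After training (which may take a while, depending on your hardware), test it:

```python
import numpy as np

predictions = trainer.predict(imdb_encoded["test"])
# predictions.predictions holds raw scores (logits), one [negative, positive] pair per review
predicted_labels = np.argmax(predictions.predictions, axis=-1)
print("Test accuracy:", (predicted_labels == predictions.label_ids).mean())
```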

This adapts a model to your data—powerful and doable!
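The model’s raw outputs are logits, which are unnormalized scores with one value per class. For two-label models like the one in Tutorial 1, the sentiment pipeline turns such logits into its confidence scores with the softmax function. The plain-Python sketch below uses made-up logits for a single review.

```python
import math


def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    largest = max(logits)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(x - largest) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up logits for one review: [negative score, positive score]
logits = [-1.5, 2.5]
probs = softmax(logits)
predicted = probs.index(max(probs))  # same as argmax: 0 = negative, 1 = positive
print([round(p, 3) for p in probs], "->", "Positive" if predicted == 1 else "Negative")
# [0.018, 0.982] -> Positive
```

Taking the argmax of the logits directly gives the same label, because softmax preserves the ordering. The conversion only matters when you want a probability-like confidence.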

Tutorial 4: Deploying with Spaces

Share your work via Hugging Face Spaces.

Go to Spaces:

Visit huggingface.co/new-space and create a new Space, choosing Gradio as the SDK.

Add Code:

Use this simple app (save it as app.py):

```python
import gradio as gr
from transformers import pipeline

sentiment = pipeline("sentiment-analysis")

def analyze(text):
    # The pipeline returns a list with one result dict per input; take the first
    result = sentiment(text)[0]
    return f"{result['label']} (Confidence: {result['score']:.3f})"

# A text box in, a text box out
gr.Interface(fn=analyze, inputs="text", outputs="text").launch()
```

Deploy:

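Upload app.py together with a requirements.txt file that lists transformers and torch (Gradio itself is already provided on Spaces that use the Gradio SDK). The Space then builds and launches your app online.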

Now anyone can try your sentiment analyzer!
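Downloading a model is slow, so it can help to check the app’s formatting logic before uploading. The sketch below assumes you restructure app.py so that `analyze()` receives its classifier as an argument. `make_analyzer` and `fake_classifier` are hypothetical helper names. The fake classifier only imitates the list-of-dicts output of `pipeline("sentiment-analysis")`.

```python
def make_analyzer(classifier):
    """Wrap any callable that behaves like a sentiment pipeline into the app's analyze() function."""
    def analyze(text):
        result = classifier(text)[0]
        return f"{result['label']} (Confidence: {result['score']:.3f})"
    return analyze


def fake_classifier(text):
    """Hypothetical stand-in for the real pipeline: returns the same list-of-dicts shape."""
    label = "NEGATIVE" if "disappoint" in text.lower() else "POSITIVE"
    return [{"label": label, "score": 0.9876}]


analyze = make_analyzer(fake_classifier)
print(analyze("This is amazing!"))       # POSITIVE (Confidence: 0.988)
print(analyze("I'm so disappointed."))   # NEGATIVE (Confidence: 0.988)
```

In app.py itself you would then write `analyze = make_analyzer(pipeline("sentiment-analysis"))` and pass that function to `gr.Interface` as before.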

Choosing Your Path with Hugging Face

Hugging Face offers multiple ways to work with AI:

Pretrained Models: Download models and run them on your own machine. This is great for offline projects, but you must provide the hardware (a local or rented CPU/GPU server) and storage yourself.

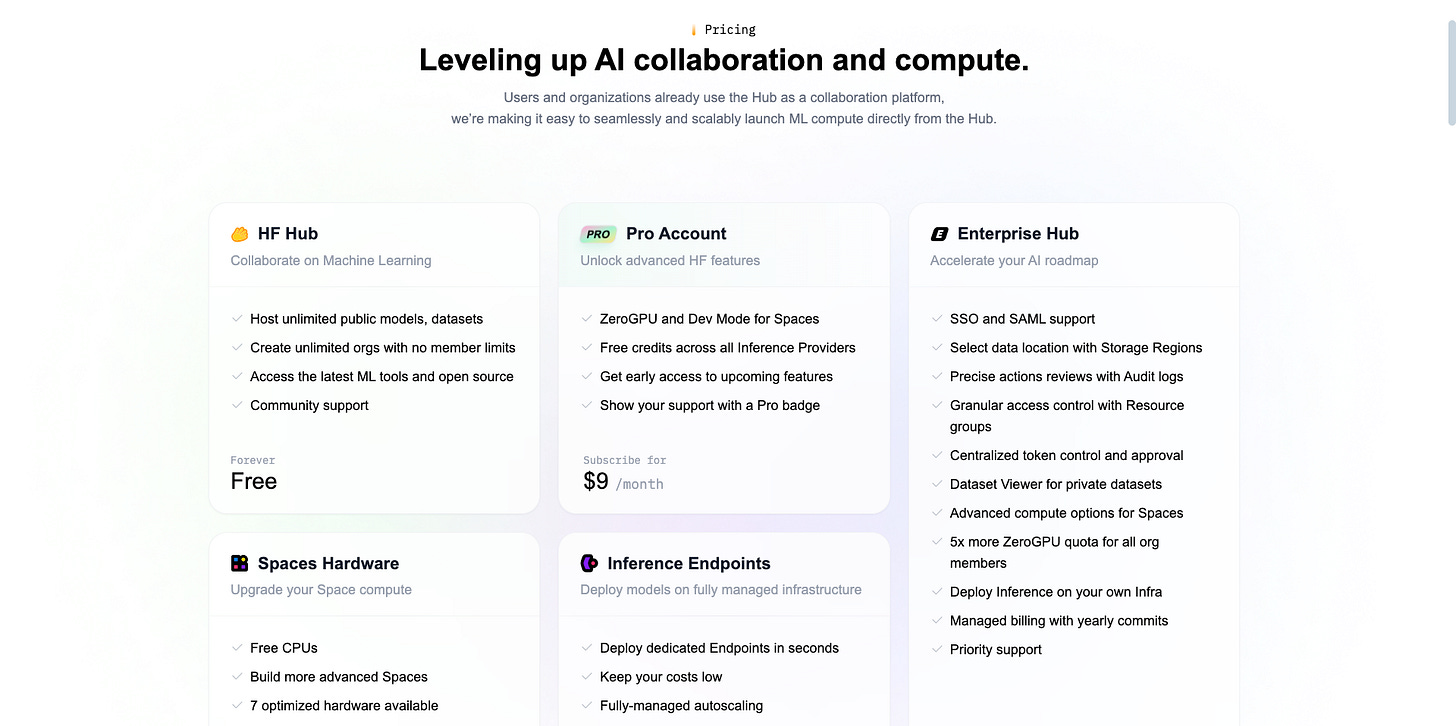

APIs: Use Hugging Face’s cloud for quick, GPU-powered predictions. This is ideal for apps, but heavier use may require a paid plan (see Hugging Face’s pricing page).

Training: Build a model from scratch. This is best for unique or custom tasks but is resource-heavy.

Fine-Tuning: Tweak existing models—perfect for customizing with less effort.

Beginners should start with pretrained models or fine-tuning—they’re fast and effective.

Next Steps

You’ve gone from zero to building AI with Hugging Face! Keep going by:

Experimenting: Swap datasets or models in the tutorials.

Learning: Explore Hugging Face’s docs.

Creating: Build a project—like a chatbot—and share it.

The key? Start small, play around, and learn from mistakes. AI is yours to explore!

Hugging Face opens the door to AI for everyone. With its models, datasets, and tools, you can go from a curious beginner to a confident creator. So, grab your keyboard, write that first script, and see where your imagination takes you!

What will you build next? Let me know in the comments so I can provide guidance.

Please let me know if you like my Data Bender (Avatar) YouTube intro. Should I continue to include it in future videos?😂